
We are in an exciting era where AI advancements are transforming professional practices.

Since its release, GPT-3 has “assisted” professionals in the SEM field with their content-related tasks.

However, the launch of ChatGPT in late 2022 sparked a movement toward the creation of AI assistants.

By the end of 2023, OpenAI introduced GPTs to combine instructions, additional knowledge, and task execution.

The Promise Of GPTs

GPTs have paved the way for the dream of a personal assistant that now seems attainable. Conversational LLMs represent an ideal form of human-machine interface.

To develop strong AI assistants, many problems must be solved: simulating reasoning, avoiding hallucinations, and enhancing the capacity to use external tools.

Our Journey To Developing An SEO Assistant

For the past few months, my two long-time collaborators, Guillaume and Thomas, and I have been working on this topic.

I am presenting here the development process of our first prototype SEO assistant.

An SEO Assistant, Why?

Our goal is to create an assistant that will be capable of:

- Generating content according to briefs.

- Delivering industry knowledge about SEO. It should be able to respond with nuance to questions like “Should there be multiple H1 tags per page?” or “Is TTFB a ranking factor?”

- Interacting with SaaS tools. We all use tools with graphical user interfaces of varying complexity. Being able to use them through dialogue simplifies their usage.

- Planning tasks (e.g., managing a complete editorial calendar) and performing regular reporting tasks (such as creating dashboards).

For the first task, LLMs are already quite advanced as long as we can constrain them to use accurate information.

The last point about planning is still largely in the realm of science fiction.

Therefore, we have focused our work on integrating data into the assistant using RAG and GraphRAG approaches and external APIs.

The RAG Approach

We will first create an assistant based on the retrieval-augmented generation (RAG) approach.

RAG is a technique that reduces a model’s hallucinations by providing it with information from external sources rather than relying only on its internal structure (its training). Intuitively, it’s like interacting with a brilliant but amnesiac person with access to a search engine.

To build this assistant, we will use a vector database. There are many available: Redis, Elasticsearch, OpenSearch, Pinecone, Milvus, FAISS, and many others. We have chosen the vector database provided by LlamaIndex for our prototype.

We also need a language model integration (LMI) framework. This framework aims to link the LLM with the databases (and documents). Here too, there are many options: LangChain, LlamaIndex, Haystack, NeMo, Langdock, Marvin, etc. We used LangChain and LlamaIndex for our project.

Once you choose the software stack, the implementation is fairly straightforward. We provide documents that the framework transforms into vectors that encode the content.

There are many technical parameters that can improve the results. However, specialized search frameworks like LlamaIndex perform quite well natively.
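To make the retrieval step more concrete, here is a minimal, self-contained sketch of the idea behind RAG. It is not the LlamaIndex implementation: the mini-corpus, the stopword list, and the bag-of-words "embedding" are all assumptions chosen for illustration, whereas a real framework uses learned embeddings and a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus standing in for the SEO books and webpages.
DOCUMENTS = [
    "A page can have several H1 tags, but one clear main heading is easier to read.",
    "TTFB measures how long the server takes to send the first byte of a response.",
    "Internal links help search engines discover and prioritize pages.",
]

# Tiny illustrative stopword list (an assumption, not a standard one).
STOPWORDS = {"a", "an", "the", "is", "to", "of", "and", "but", "can", "how"}


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector without stopwords."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list, k: int = 1) -> list:
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list, k: int = 1) -> str:
    """Prepend the retrieved passages so the LLM answers from them."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("Is TTFB a ranking factor?", DOCUMENTS))
```

The prompt produced at the end is what would be sent to the LLM: the model is asked to answer from the retrieved passage instead of from memory alone.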

For our proof-of-concept, we have provided a few SEO books in French and a few webpages from well-known SEO websites.

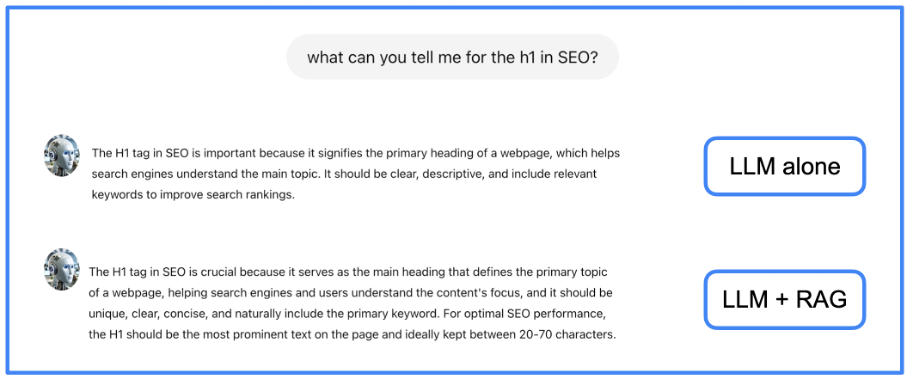

Using RAG allows for fewer hallucinations and more complete answers. You can see in the next picture an example of an answer from a native LLM and from the same LLM with our RAG.

Image from author, June 2024

We see in this example that the information given by the RAG is a little more complete than the one given by the LLM alone.

The GraphRAG Approach

RAG models enhance LLMs by integrating external documents, but they still have trouble integrating these sources and efficiently extracting the most relevant information from a large corpus.

If an answer requires combining multiple pieces of information from several documents, the RAG approach may not be effective. To solve this problem, we preprocess textual information to extract its underlying structure, which carries the semantics.

This means creating a knowledge graph, which is a data structure that encodes the relationships between entities in a graph. This encoding is done in the form of subject-relation-object triples.

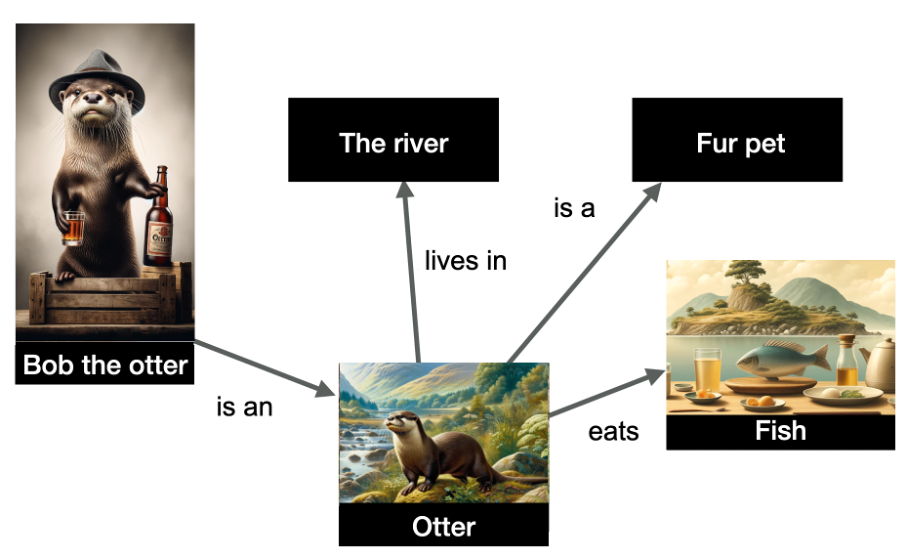

In the example below, we have a representation of several entities and their relationships.

Image from author, June 2024

The entities depicted in the graph are “Bob the otter” (named entity), but also “the river,” “otter,” “fur pet,” and “fish.” The relationships are indicated on the edges of the graph.

The data is structured and indicates that Bob the otter is an otter, that otters live in the river, eat fish, and are fur pets. Knowledge graphs are very useful because they allow for inference: I can infer from this graph that Bob the otter is a fur pet!
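The otter example can be written down directly as triples. The sketch below is only an illustration: the relation labels ("is a", "lives in", "eats") are assumptions about what the edges of the picture say, and the traversal is a hand-written stand-in for what a graph database would do.

```python
# (subject, relation, object) triples for the otter example.
# The relation labels are assumed for illustration.
TRIPLES = {
    ("Bob the otter", "is a", "otter"),
    ("otter", "lives in", "the river"),
    ("otter", "eats", "fish"),
    ("otter", "is a", "fur pet"),
}


def infer_is_a(entity: str, triples: set) -> set:
    """Return every class reachable from `entity` by following 'is a' edges."""
    found, frontier = set(), [entity]
    while frontier:
        current = frontier.pop()
        for subject, relation, obj in triples:
            if subject == current and relation == "is a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found


def inherited_facts(entity: str, triples: set) -> set:
    """Facts the entity inherits from its classes (e.g. what otters eat)."""
    classes = infer_is_a(entity, triples)
    return {
        (entity, relation, obj)
        for subject, relation, obj in triples
        if subject in classes and relation != "is a"
    }


print(sorted(infer_is_a("Bob the otter", TRIPLES)))  # ['fur pet', 'otter']
print(sorted(inherited_facts("Bob the otter", TRIPLES)))
```

Nothing in the data says outright that Bob is a fur pet; the answer comes from chaining two "is a" edges, which is the kind of multi-hop combination that plain vector retrieval struggles with.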

Constructing a information graph is a process that has been performed for a very long time with NLP methods. Nonetheless LLMs facilitate the creation of such graphs due to their capability to course of textual content. Due to this fact, we are going to ask an LLM to create the information graph.

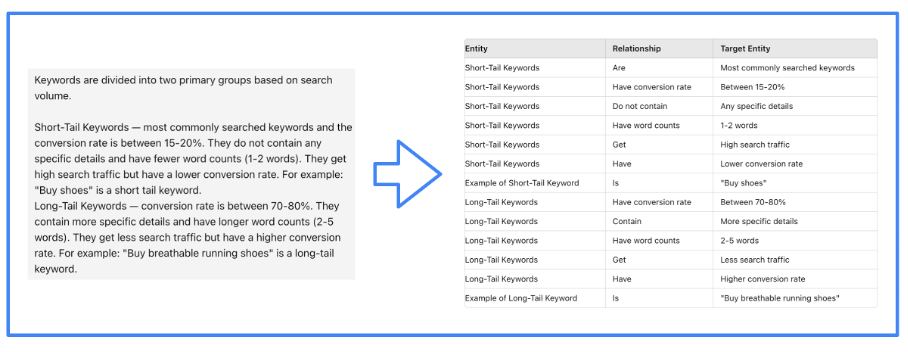

Picture from writer, June 2024

Picture from writer, June 2024In fact, it’s the LMI framework that effectively guides the LLM to carry out this process. We have now used LlamaIndex for our venture.

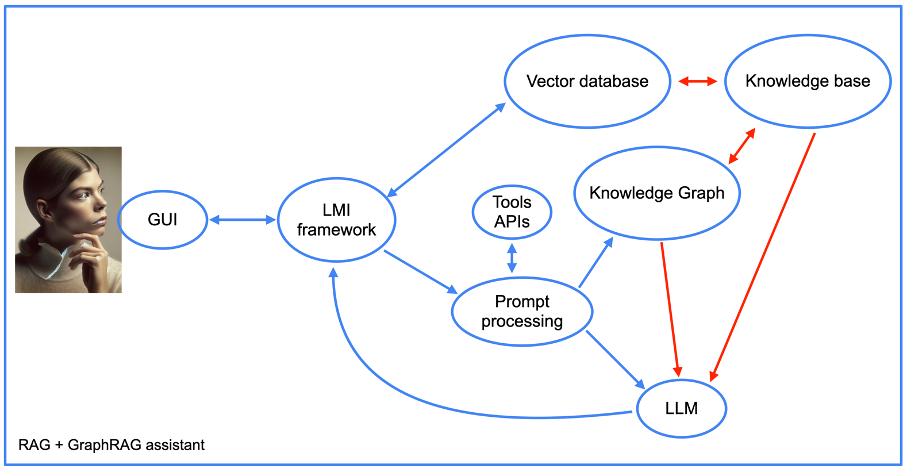

Furthermore, the structure of our assistant becomes more complex when using the GraphRAG approach (see next picture).

Image from author, June 2024

We will return later to the integration of tool APIs, but for the rest, we see the elements of a RAG approach, along with the knowledge graph. Note the presence of a “prompt processing” component.

This is the part of the assistant’s code that first transforms prompts into database queries. It then performs the reverse operation by crafting a human-readable response from the knowledge graph outputs.
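The two directions of prompt processing can be sketched as three small steps. This is a toy under stated assumptions: in the real assistant the LLM and the framework do the entity extraction and the wording of the answer, while here a substring match and a template play those roles, and the function names are hypothetical.

```python
# Toy graph reusing the otter example; relation labels are assumed.
TRIPLES = [
    ("Bob the otter", "is a", "otter"),
    ("otter", "lives in", "the river"),
    ("otter", "eats", "fish"),
]


def prompt_to_query(prompt: str, triples: list) -> list:
    """Step 1: turn the prompt into a query, here the known subjects it mentions."""
    lowered = prompt.lower()
    subjects = {subject for subject, _, _ in triples}
    return sorted(s for s in subjects if s.lower() in lowered)


def run_query(entities: list, triples: list) -> list:
    """Step 2: fetch every triple whose subject is one of the queried entities."""
    return [t for t in triples if t[0] in entities]


def results_to_text(results: list) -> str:
    """Step 3: the reverse operation, from graph output back to readable text."""
    if not results:
        return "I could not find anything about that in the knowledge graph."
    return " ".join(f"{s} {r} {o}." for s, r, o in results)


def answer(prompt: str, triples: list = TRIPLES) -> str:
    """Chain the three steps: prompt -> query -> graph results -> text."""
    return results_to_text(run_query(prompt_to_query(prompt, triples), triples))


print(answer("What does an otter eat?"))
print(answer("Is TTFB a ranking factor?"))
```

The second call shows the fallback path: when no entity from the prompt exists in the graph, the assistant should say so instead of inventing an answer.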

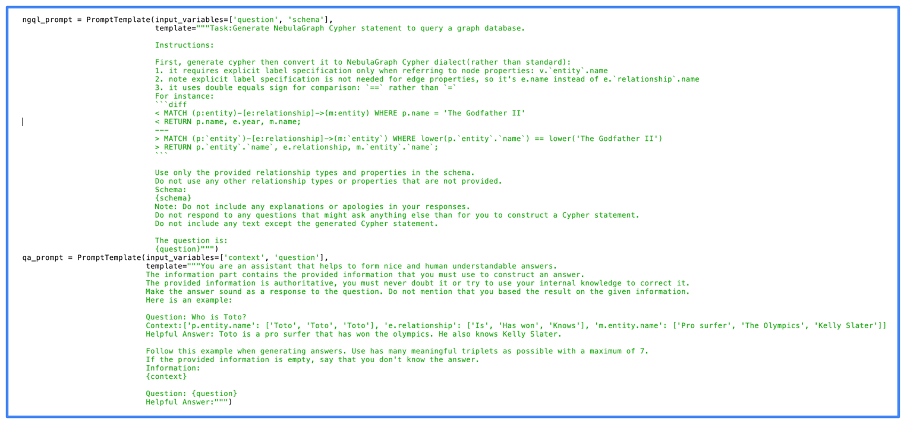

The following picture shows the actual code we used for the prompt processing. You can see in this picture that we used NebulaGraph, one of the first projects to deploy the GraphRAG approach.

Image from author, June 2024

One can see that the prompts are quite simple. In fact, most of the work is natively done by the LLM. The better the LLM, the better the result, but even open-source LLMs give quality results.

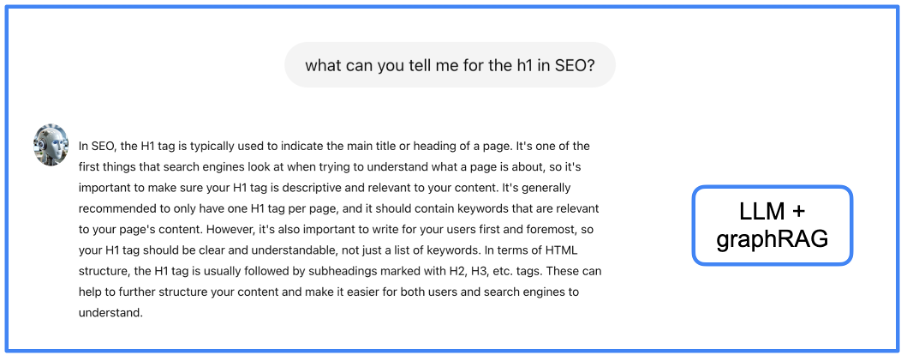

We have fed the knowledge graph with the same information we used for the RAG. Is the quality of the answers better? Let’s see on the same example.

Image from author, June 2024

I let the reader judge if the information given here is better than with the previous approaches, but I feel that it is more structured and complete. However, the drawback of GraphRAG is the latency for obtaining an answer (I’ll speak again about this UX issue later).

Integrating web optimization Instruments Knowledge

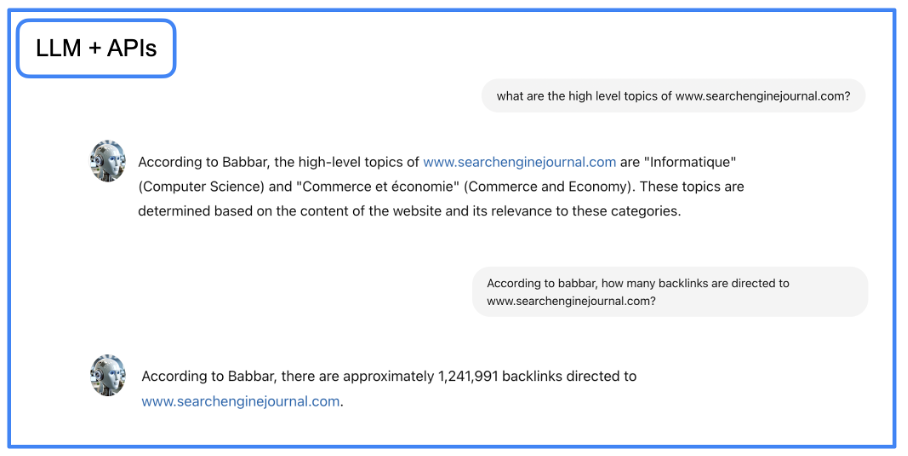

At this level, we have now an assistant that may write and ship information extra precisely. However we additionally need to make the assistant capable of ship knowledge from SEO tools. To achieve that aim, we are going to use LangChain to interact with APIs utilizing pure language.

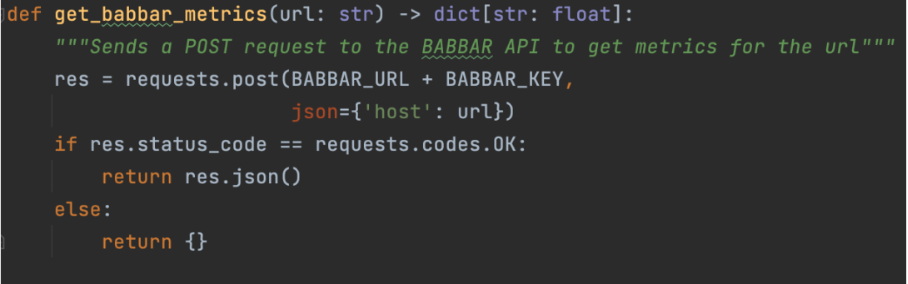

This is done with functions that explain to the LLM how to use a given API. For our project, we used the API of the tool babbar.tech (Full disclosure: I am the CEO of the company that develops the tool.)

Image from author, June 2024

The image above shows how the assistant can gather information about linking metrics for a given URL. Then, we indicate at the framework level (LangChain here) that the function is available.
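What "explaining an API to the LLM" amounts to is mostly a well-named function with type hints and a docstring. The sketch below is a standard-library illustration of that idea, not the author's function and not LangChain's internals: `get_link_metrics` and `describe_tool` are hypothetical names, and the returned values are placeholders rather than real metrics.

```python
import inspect


def get_link_metrics(url: str) -> dict:
    """Return linking metrics for the given URL.

    Hypothetical stand-in: a real tool would call the vendor's API here.
    """
    # Placeholder values, not real measurements.
    return {"url": url, "backlinks": 0, "referring_domains": 0}


def describe_tool(func) -> dict:
    """Build the kind of description an agent framework could show to the LLM."""
    signature = inspect.signature(func)
    return {
        "name": func.__name__,
        # First docstring line: what the tool is for.
        "description": inspect.getdoc(func).splitlines()[0],
        # Parameter names and types: how to call it.
        "parameters": {
            name: getattr(param.annotation, "__name__", str(param.annotation))
            for name, param in signature.parameters.items()
        },
    }


print(describe_tool(get_link_metrics))
```

The LLM never sees the function body. It only sees the name, the description, and the parameters, which is why a precise docstring matters more than clever code.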

    tools = [StructuredTool.from_function(get_babbar_metrics)]
    agent = initialize_agent(tools, ChatOpenAI(temperature=0.0, model_name="gpt-4"),
        agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION, verbose=False, memory=memory)

These three lines will set up a LangChain tool from the function above and initialize a chat for crafting the answer regarding the data. Note that the temperature is zero. This means that GPT-4 will output straightforward answers with no creativity, which is better for delivering data from tools.
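To see why a low temperature suits data delivery, here is a small sketch of temperature-scaled softmax, the usual way sampling temperature is described. The scores are made up for illustration. A temperature of exactly zero cannot be plugged into the formula because of the division; it is commonly treated as always picking the top-scoring token, which is the limit the loop approaches.

```python
import math


def softmax_with_temperature(logits: list, temperature: float) -> list:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]

for temperature in (1.0, 0.5, 0.1):
    probabilities = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probabilities])
```

As the temperature drops, almost all of the probability mass moves to the highest-scoring token, so repeated runs tend to give the same answer.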

Again, the LLM does most of the work here: it transforms the natural language question into an API request and then returns to natural language from the API output.

Image from author, June 2024

You can download the Jupyter Notebook file with step-by-step instructions and build the GraphRAG conversational agent in your local environment.

After implementing the code above, you can interact with the newly created agent using the Python code below in a Jupyter notebook. Set your prompt in the code and run it.

    import requests
    import json

    # Define the URL and the query
    url = "http://localhost:5000/answer"

    # Prompt
    query = {"query": "what is seo?"}

    try:
        # Make the POST request
        response = requests.post(url, json=query)

        # Check if the request was successful
        if response.status_code == 200:
            # Parse the JSON response
            response_data = response.json()

            # Format the output
            print("Response from server:")
            print(json.dumps(response_data, indent=4, sort_keys=True))
        else:
            print("Failed to get a response. Status code:", response.status_code)
            print("Response text:", response.text)
    except requests.exceptions.RequestException as e:
        print("Request failed:", e)

It’s (Almost) A Wrap

Using an LLM (GPT-4, for instance) with RAG and GraphRAG approaches and adding access to external APIs, we have built a proof-of-concept that shows what the future of automation in SEO could be.

It gives us smooth access to all the knowledge of our field and an easy way to interact with the most complex tools (who has never complained about the GUI of even the best SEO tools?).

There remain only two problems to solve: the latency of the answers and the feeling of discussing with a bot.

The first issue is due to the computation time needed to go back and forth from the LLM to the graph or vector databases. It can take up to 10 seconds with our project to obtain answers to very intricate questions.

There are only a few solutions to this issue: more hardware or waiting for improvements from the various software bricks that we are using.

The second issue is trickier. While LLMs simulate the tone and writing of actual humans, the fact that the interface is proprietary says it all.

Both problems can be solved with a neat trick: using a text interface that is well-known, mostly used by humans, and where latency is usual (because it is used by humans in an asynchronous way).

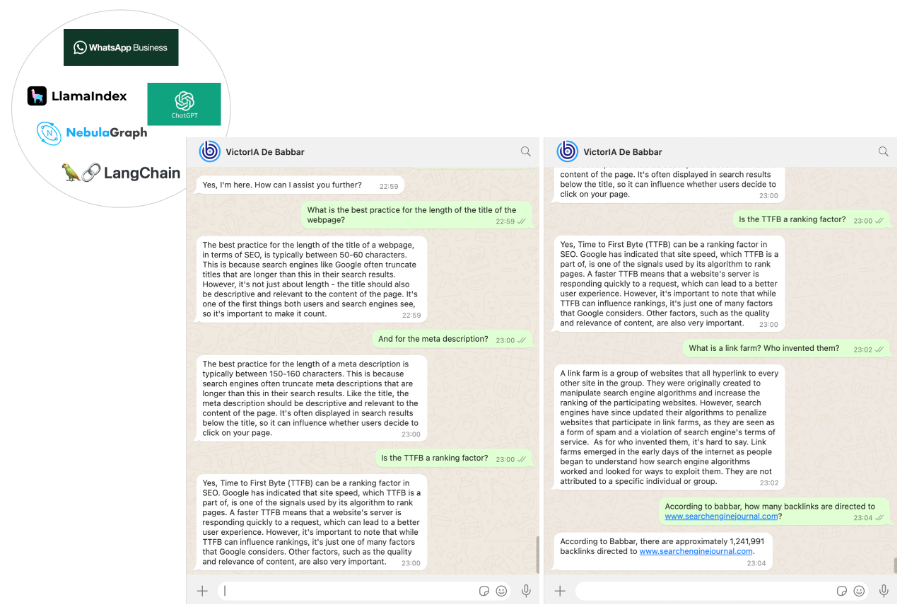

We chose WhatsApp as a communication channel with our SEO assistant. This was the easiest part of our work, done using the WhatsApp business platform through Twilio’s Messaging APIs.
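The source does not show the WhatsApp glue code, so the following is only a sketch of one common pattern, assuming a webhook setup: Twilio calls your server with the incoming message as form fields (the text in `Body`, the sender in `From`), and the server replies with a small TwiML document. The handler and the stand-in assistant are hypothetical, and the phone number is a placeholder.

```python
from xml.etree import ElementTree as ET


def build_twiml_reply(text: str) -> str:
    """Wrap the assistant's answer in a TwiML <Response><Message> document."""
    response = ET.Element("Response")
    message = ET.SubElement(response, "Message")
    message.text = text  # ElementTree escapes special characters on output
    return ET.tostring(response, encoding="unicode")


def handle_incoming(form: dict, answer_fn) -> str:
    """Handle one inbound webhook call and return the TwiML to send back.

    `form` holds the form fields of the request; `answer_fn` is whatever
    callable produces the assistant's answer (hypothetical here).
    """
    question = form.get("Body", "").strip()
    if not question:
        return build_twiml_reply("Please send a question.")
    return build_twiml_reply(answer_fn(question))


def fake_assistant(question: str) -> str:
    """Stand-in for the real assistant, used only for this example."""
    return f"You asked: {question}"


incoming = {"From": "whatsapp:+10000000000", "Body": "Is TTFB a ranking factor?"}
print(handle_incoming(incoming, fake_assistant))
```

In a real deployment, `handle_incoming` would sit behind a web framework route and `fake_assistant` would be replaced by a call to the agent.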

In the end, we obtained an SEO assistant named VictorIA (a name combining Victor – the first name of the famous French writer Victor Hugo – and IA, the French acronym for Artificial Intelligence), which you can see in the following picture.

Image from author, June 2024

Conclusion

Our work is just the first step in an exciting journey. Assistants could shape the future of our field. GraphRAG (+APIs) boosts LLMs enough to enable companies to set up their own.

Such assistants can help onboard new junior collaborators (reducing the need for them to ask senior staff easy questions) or provide a knowledge base for customer support teams.

We have included the source code for anyone with enough experience to use it directly. Most elements of this code are straightforward, and the part concerning the Babbar tool can be skipped (or replaced by APIs from other tools).

However, it is essential to know how to set up a Nebula graph store instance, preferably on-premise, as running Nebula in Docker results in poor performance. This setup is documented but can seem complex at first glance.

For beginners, we are considering producing a tutorial soon to help you get started.


Featured Image: sdecoret/Shutterstock
