
Over the past week, I saw many arguments against digging deep into the 2,596 pages.

But the one question we should ask ourselves is, “How can I test and learn as much as possible from these documents?”

SEO is an applied science where theory isn’t the end goal but the basis for experiments.


14,000 Test Ideas

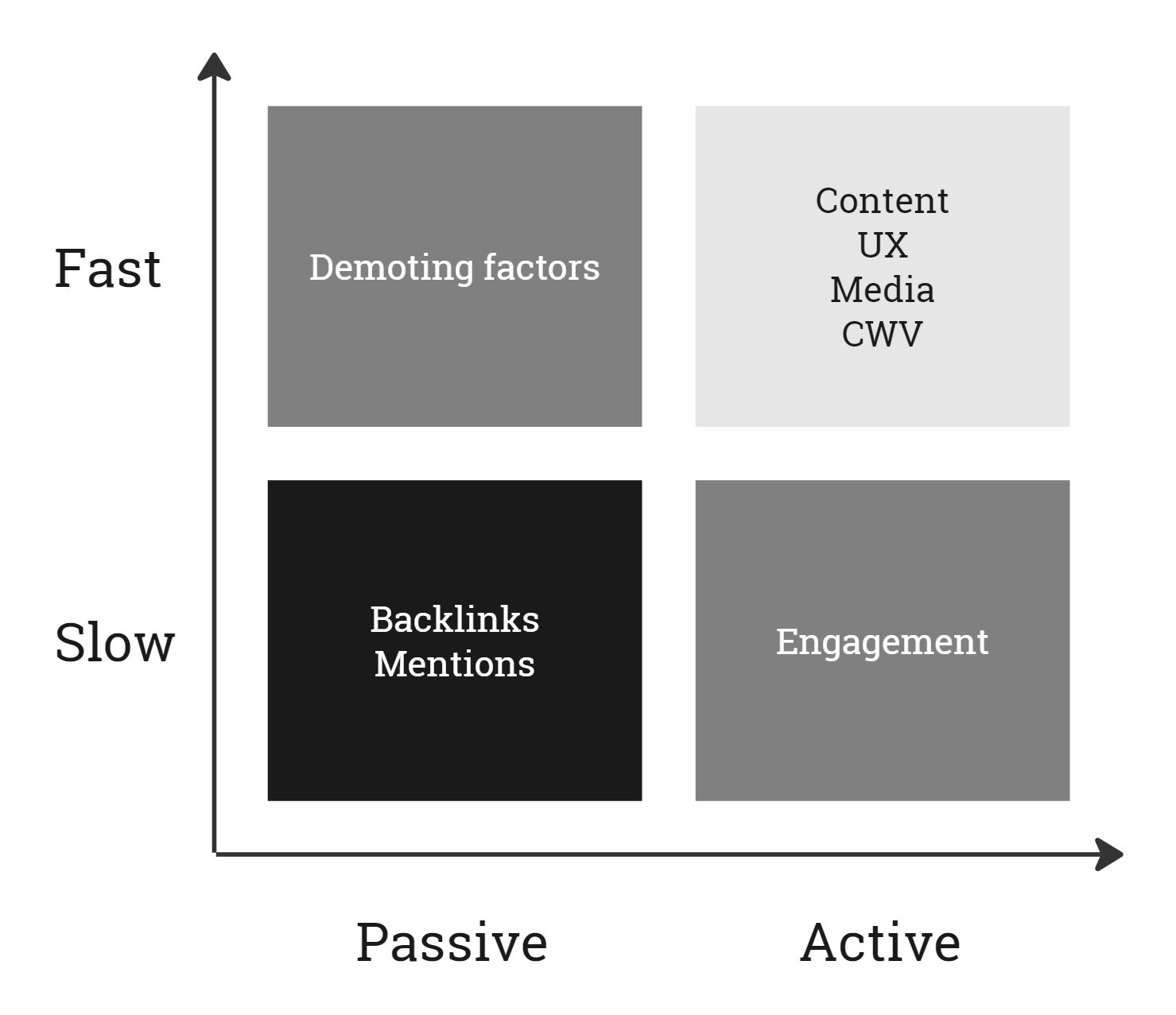

You couldn’t ask for a better breeding ground for test ideas. But we can’t test every factor the same way. Factors have different types (number/integer: range, Boolean: yes/no, string: word/list) and response times (meaning the speed at which they lead to a change in organic rank).

As a result, we can A/B test fast and active factors, while we have to before/after test slow and passive ones.
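For fast, active factors, SEO A/B tests typically split comparable pages (not users) into a control group and a variant group, because a search engine sees only one version of each URL. The sketch below is one way to do that split, assuming you already have a list of similar URLs; the hash-based assignment, the function names, and the salt value are illustrative assumptions, not something described in the leak.

```python
import hashlib

def assign_group(url: str, salt: str = "title-test-1") -> str:
    """Deterministically assign a URL to 'control' or 'variant'.

    Hashing salt + URL keeps the assignment stable between runs, so a page
    never switches groups mid-test. The salt is a hypothetical test ID;
    a new salt reshuffles the split for the next experiment.
    """
    digest = hashlib.sha256(f"{salt}:{url}".encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 else "control"

def split_pages(urls: list[str], salt: str = "title-test-1") -> dict[str, list[str]]:
    """Split a list of comparable URLs into control and variant groups."""
    groups: dict[str, list[str]] = {"control": [], "variant": []}
    for url in urls:
        groups[assign_group(url, salt)].append(url)
    return groups

# Hypothetical pages of the same type (e.g., "Best X" articles).
pages = [f"https://example.com/best/{i}" for i in range(20)]
groups = split_pages(pages)
print(len(groups["control"]), "control pages,", len(groups["variant"]), "variant pages")
```

Because the split is random rather than balanced, it is worth checking that both groups have similar baseline traffic before shipping the change to the variant pages.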

Prioritize tests by speed. (Image Credit: Kevin Indig)

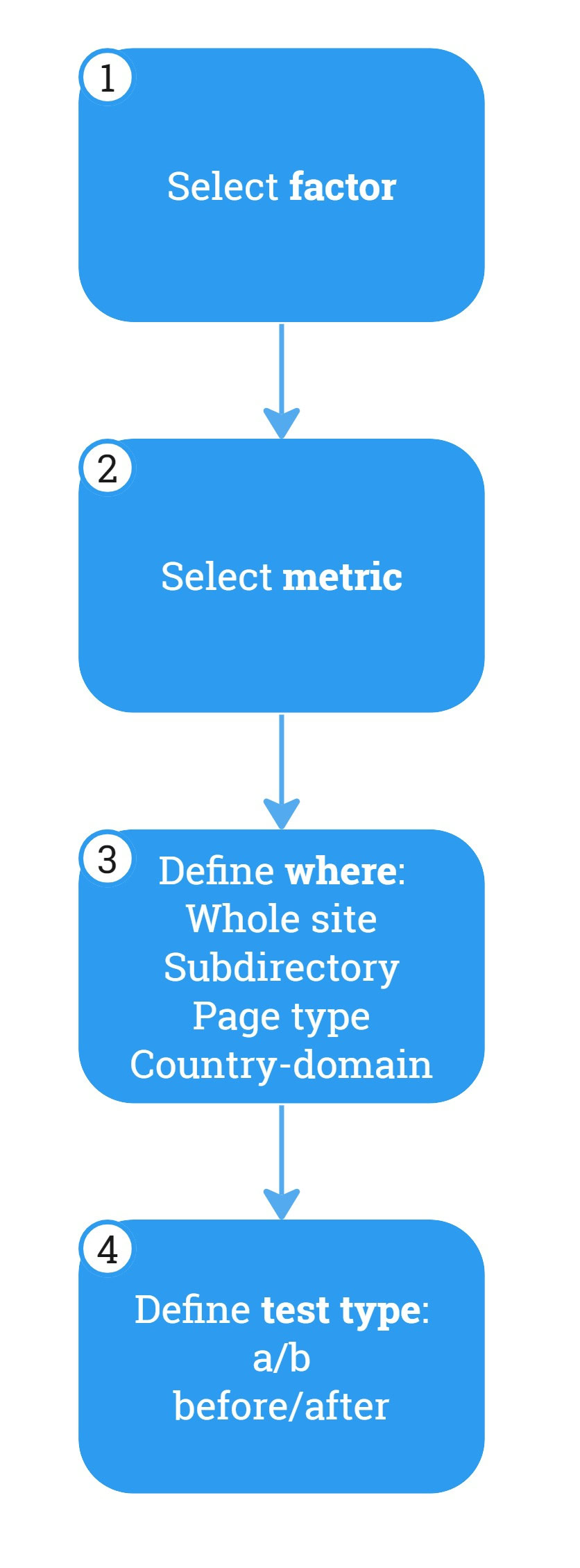

Test ranking factors systematically by:

- Selecting a ranking factor.

- Selecting the impacted (success) metric.

- Defining where you test.

- Defining the type of test.
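The four steps above can be captured as one record per experiment, so every test has a factor, a metric, a scope, and a test type written down before it starts. This is a minimal sketch: the class name, field names, and the rule "A/B only if the factor is fast and directly controllable" are assumptions that paraphrase the speed-based prioritization, and the example values are hypothetical.

```python
from dataclasses import dataclass
from typing import Literal

FactorType = Literal["integer", "boolean", "string"]

@dataclass
class RankingFactorTest:
    factor: str                  # 1. the ranking factor under test
    factor_type: FactorType      # integer (range), boolean (yes/no), or string
    metric: str                  # 2. the impacted (success) metric
    scope: str                   # 3. where you test: country site, subdirectory, page type
    fast_response: bool          # does the factor change organic rank quickly?
    directly_controllable: bool  # active (you control it) vs. passive (you influence it)

    @property
    def test_type(self) -> str:
        """4. The type of test: A/B for fast, active factors; before/after otherwise."""
        if self.fast_response and self.directly_controllable:
            return "A/B"
        return "before/after"

# Hypothetical example plan.
plan = RankingFactorTest(
    factor="Intrusive interstitials",
    factor_type="boolean",
    metric="Rank (for main keyword)",
    scope="/blog/",
    fast_response=True,
    directly_controllable=True,
)
print(plan.factor, "->", plan.test_type)
```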

Image Credit: Kevin Indig

Ranking Factors

Most ranking factors in the leak are integers, meaning they work on a spectrum, but some Boolean factors are easy to test:

- Image compression: Yes/No?

- Intrusive interstitials: Yes/No?

- Core Web Vitals: Yes/No?

Factors you can directly control:

- UX (navigation, font size, line spacing, image quality).

- Content (fresh, optimized titles, not duplicative, rich in relevant entities, focused on one user intent, high effort, crediting original sources, using canonical forms of a word instead of slang, high-quality UGC, expert author).

- User engagement (high rate of task completion).

Demoting (negative) ranking factors:

- Links from low-quality pages and domains.

- Aggressive anchor text (unless you have an extremely strong link profile).

- Poor navigation.

- Poor user signals.

Factors you can only influence passively:

- Title match and relevance between the source and the linked document.

- Link clicks.

- Links from new and trusted pages.

- Domain authority.

- Brand mentions.

- Homepage PageRank.

Start with an assessment of your performance in the area you want to test. A simple use case would be Core Web Vitals.
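Core Web Vitals lend themselves to a yes/no baseline. Google documents "good" thresholds of LCP ≤ 2.5 s, INP ≤ 200 ms, and CLS ≤ 0.1, assessed at the 75th percentile of page loads. The sketch below applies those thresholds to field samples you have already collected (for example from your own real-user monitoring); the sample values and function names are hypothetical, and the nearest-rank percentile is a simplifying choice.

```python
import math

# Google's published "good" thresholds, evaluated at the 75th percentile.
THRESHOLDS = {"lcp_s": 2.5, "inp_ms": 200, "cls": 0.1}

def p75(values: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]

def passes_cwv(samples: dict[str, list[float]]) -> dict[str, bool]:
    """Turn raw field samples into per-metric and overall Yes/No answers."""
    results = {metric: p75(samples[metric]) <= limit for metric, limit in THRESHOLDS.items()}
    results["all"] = all(results.values())
    return results

# Hypothetical field data for one page type.
samples = {
    "lcp_s": [1.8, 2.6, 2.9, 3.9],
    "inp_ms": [120, 150, 180, 260],
    "cls": [0.02, 0.05, 0.08, 0.3],
}
print(passes_cwv(samples))
```

In this made-up example, LCP fails at the 75th percentile while INP and CLS pass, so the page type as a whole counts as "No".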

Metrics

Select the right metric for the right factor based on the description in the leaked document or your understanding of how a factor might influence a metric:

- Crawl rate.

- Indexing (Yes/No).

- Rank (for the main keyword).

- Click-through rate (CTR).

- Engagement.

- Keywords a page ranks for.

- Organic clicks.

- Impressions.

- Rich snippets.

Where To Test

Find the right place to test:

- If you’re skeptical, use a country-specific domain or a site where you can test with low risk. If you have a site in many languages, you can roll out changes based on the leak in one country and compare relative performance against your core country.

- You can limit tests to one page type or subdirectory to isolate the impact as well as possible.

- Limit tests to pages targeting a specific type of keyword (e.g., “Best X”) or user intent (e.g., “Read reviews”).
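Comparing "relative performance" between a test country and a core country boils down to subtracting the control market's change from the test market's change, so shifts that hit both markets (seasonality, a core update) cancel out. This is a minimal difference-in-differences sketch; the function names and the click numbers are hypothetical.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from the before period to the after period."""
    return (after - before) / before

def relative_lift(test_before: float, test_after: float,
                  control_before: float, control_after: float) -> float:
    """Test-country change minus control-country change (difference in differences)."""
    return pct_change(test_before, test_after) - pct_change(control_before, control_after)

# Hypothetical weekly organic clicks before and after rolling out a change in the test country.
lift = relative_lift(test_before=1000, test_after=1150, control_before=5000, control_after=5250)
print(f"Relative lift: {lift:.1%}")  # → Relative lift: 10.0%
```

Here the test country grew 15% and the control country 5%, so roughly 10 percentage points of growth are attributable to the change, assuming both markets would otherwise have moved together.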

Some ranking factors are sitewide signals, like site authority, and others are page-specific, like click-through rates.

Issues

Ranking factors can work with or against each other since they’re part of an equation.

Humans are notoriously bad at intuitively understanding functions with many variables, which means we most likely underestimate how much goes into achieving a high rank score, but also how much a few variables can influence the outcome.

The high complexity of the relationship between ranking factors shouldn’t keep us from experimenting.

Aggregators can test more easily than Integrators because they have more similar pages, which lead to more significant results. Integrators, which have to create content themselves, have differences between every page that dilute test results.

My favorite test: One of the best things you can do for your understanding of SEO is to score ranking factors by your own perception and then systematically challenge and test your assumptions. Create a spreadsheet with every ranking factor, give each one a number between zero and one based on your idea of its importance, and multiply all factors.
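The same spreadsheet exercise fits in a few lines of code. Multiplying (rather than adding) the scores is what makes a single weak factor drag the whole result down, which is exactly the many-variable effect that is hard to grasp intuitively. The factor names and scores below are hypothetical perceptions, not values from the leak.

```python
from math import prod

# Hypothetical scores between 0 and 1 reflecting your own perception of importance.
perceived = {
    "Content effort": 0.9,
    "Title match": 0.8,
    "Core Web Vitals": 0.6,
    "Homepage PageRank": 0.7,
}

def combined_score(scores: dict[str, float]) -> float:
    """Multiply all factor scores, as in the spreadsheet exercise."""
    if not all(0 <= s <= 1 for s in scores.values()):
        raise ValueError("scores must be between 0 and 1")
    return prod(scores.values())

baseline = combined_score(perceived)
# One weak factor collapses the product even though the others are unchanged.
weak_cwv = combined_score({**perceived, "Core Web Vitals": 0.1})
print(round(baseline, 4), round(weak_cwv, 4))  # → 0.3024 0.0504
```

Once tests come back, adjust the scores that turned out to be wrong and rerun the product to see how your mental model shifts.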

Monitoring Systems

Testing only gives us an initial answer about the importance of ranking factors. Monitoring allows us to measure relationships over time and come to more robust conclusions.

The idea is to track metrics that reflect ranking factors (CTR could reflect title optimization, for example) and chart them over time to see whether optimization bears fruit. The idea is no different from regular (or what should be regular) monitoring, except for the new metrics.

You can build monitoring systems in:

- Looker.

- Amplitude.

- Mixpanel.

- Tableau.

- Domo.

- Geckoboard.

- GoodData.

- Power BI.

The tool isn’t as important as the right metrics and URL paths to segment by.
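Since the tool matters less than the metric, the core of such a dashboard can be sketched without any of them: compute a ranking-factor proxy (here CTR as a proxy for title optimization) per week for one page type, smooth it, and compare before and after a change. The weekly numbers are hypothetical, for example exported from Search Console for one URL path; the four-week window is an arbitrary assumption.

```python
from collections import deque

def weekly_ctr(clicks: list[int], impressions: list[int]) -> list[float]:
    """CTR per week; weeks without impressions count as 0."""
    return [c / i if i else 0.0 for c, i in zip(clicks, impressions)]

def rolling_mean(values: list[float], window: int = 4) -> list[float]:
    """Trailing moving average that smooths week-to-week noise."""
    recent = deque(maxlen=window)
    smoothed = []
    for value in values:
        recent.append(value)
        smoothed.append(sum(recent) / len(recent))
    return smoothed

# Hypothetical weekly numbers for one page type; titles were rewritten after week 3.
clicks = [40, 42, 38, 60, 64, 66]
impressions = [1000, 1000, 1000, 1000, 1000, 1000]
ctr = weekly_ctr(clicks, impressions)
trend = rolling_mean(ctr)
before, after = sum(ctr[:3]) / 3, sum(ctr[3:]) / 3
print(f"Mean CTR before: {before:.2%}, after: {after:.2%}")
```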

Example Metrics

Measure metrics by page type or a set of URLs over time to track the impact of optimizations.

Note: I’m using thresholds based on my personal experience that you should challenge.

User Engagement:

- Average number of clicks on navigation.

- Average scroll depth.

- CTR (SERP to site).

Backlink Quality:

- % of links with high topic-fit/title-fit between source and target.

- % of links from pages that are younger than 1 year.

- % of links from pages that rank for at least one keyword in the top 10.

Page Quality:

- Average dwell time (compared between pages of the same type).

- % of users who spend at least 30 seconds on the site.

- % of pages that rank in the top 3 for their target keyword.

Site Quality:

- % of pages that drive organic traffic.

- % of zero-click URLs over the last 90 days.

- Ratio between indexed and non-indexed pages.
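The site-quality metrics above reduce to simple counts once you have one row per URL. A minimal sketch, assuming a hypothetical export with an indexing flag and 90-day organic clicks per URL; measuring "pages that drive organic traffic" over the same 90-day window is an assumption that makes it the complement of the zero-click share.

```python
from dataclasses import dataclass

@dataclass
class PageStats:
    url: str
    indexed: bool
    organic_clicks_90d: int  # organic clicks over the last 90 days

def site_quality(pages: list[PageStats]) -> dict[str, float]:
    """Compute the three site-quality metrics from per-URL rows."""
    if not pages:
        raise ValueError("need at least one page")
    total = len(pages)
    indexed = sum(p.indexed for p in pages)
    with_traffic = sum(p.organic_clicks_90d > 0 for p in pages)
    non_indexed = total - indexed
    return {
        "pct_pages_with_organic_traffic": with_traffic / total,
        "pct_zero_click_urls_90d": (total - with_traffic) / total,
        "indexed_to_non_indexed": indexed / non_indexed if non_indexed else float("inf"),
    }

# Hypothetical export for a small site.
pages = [
    PageStats("https://example.com/a", True, 120),
    PageStats("https://example.com/b", True, 0),
    PageStats("https://example.com/c", True, 5),
    PageStats("https://example.com/d", False, 0),
]
print(site_quality(pages))
```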

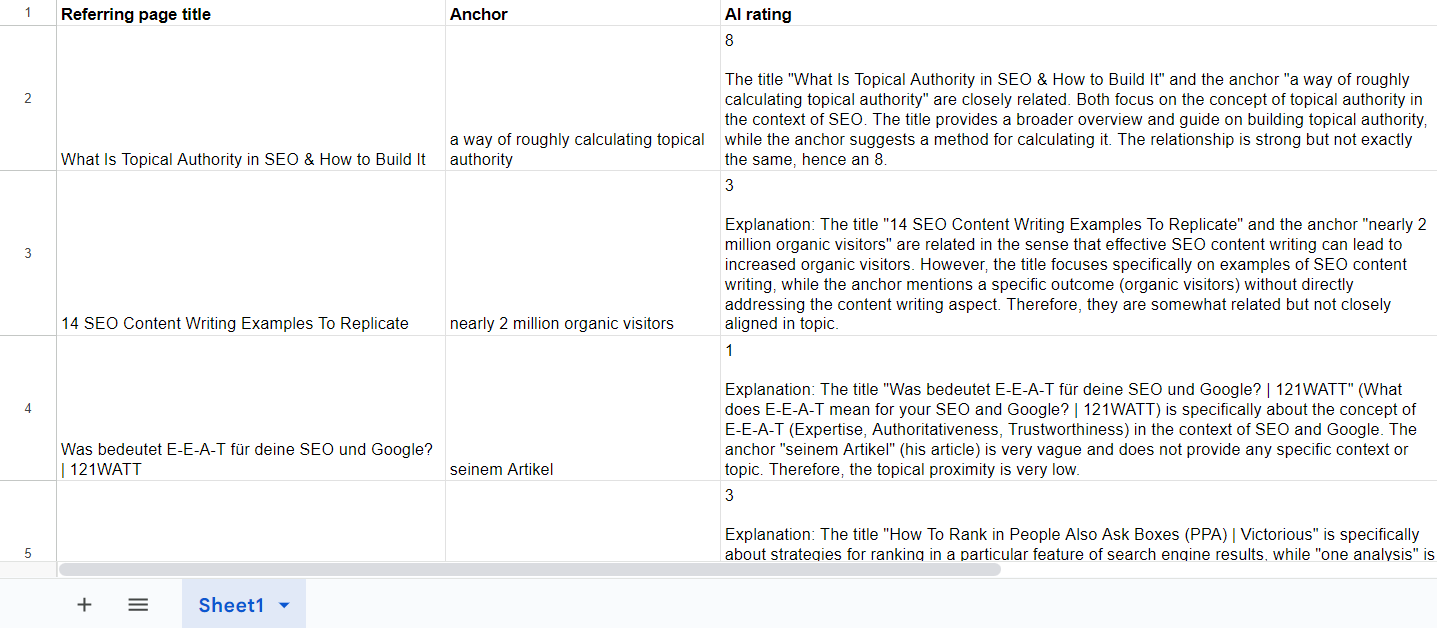

It’s ironic that the leak happened shortly after Google started showing AI-generated answers in results (AI Overviews), because we can use AI to find SEO gaps based on the leak.

One example is title matching between source and target for backlinks. With common SEO tools, we can pull the titles, anchor text, and surrounding content of the link for referring and target pages.

We can then rate the topical proximity or token overlap with common AI tools, Google Sheets/Excel integrations, or local LLMs and basic prompts like “Rate the topical proximity of the title (column B) compared to the anchor (column C) on a scale of 1 to 10, with 10 being exactly the same and 1 having no relationship at all.”

Using AI to rate title match between link sources and targets. (Image Credit: Kevin Indig)
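Token overlap, unlike topical proximity, doesn't need an AI model at all, so it makes a cheap first pass before sending rows to an LLM. The sketch computes Jaccard similarity (shared tokens divided by all distinct tokens) between a title and an anchor, and builds the rating prompt per row as plain text; it calls no specific AI API, and the regex tokenizer and function names are simplifying assumptions.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; a crude stand-in for real tokenization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def token_overlap(a: str, b: str) -> float:
    """Jaccard similarity between the token sets of two strings (0 to 1)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)

def rating_prompt(title: str, anchor: str) -> str:
    """Build the 1-to-10 topical proximity prompt for one title/anchor pair."""
    return (
        "Rate the topical proximity of the title compared to the anchor on a scale of 1 to 10, "
        "with 10 being exactly the same and 1 having no relationship at all.\n"
        f"Title: {title}\nAnchor: {anchor}"
    )

# Hypothetical title/anchor pair pulled from a backlink export.
title, anchor = "Best Running Shoes for Flat Feet", "running shoes for flat feet"
print(round(token_overlap(title, anchor), 3))  # → 0.833
print(rating_prompt(title, anchor))
```

Rows with near-zero overlap are good candidates for the LLM rating, since exact word matches already cover the obvious cases.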

A Leak Of Their Own

Google’s ranking factor leak isn’t the first time the inner workings of a big platform’s algorithm became available to the public:

1. In January 2023, a Yandex leak revealed many ranking factors that we also found in the latest Google leak. The muted reaction surprised me just as much back then as it does today.

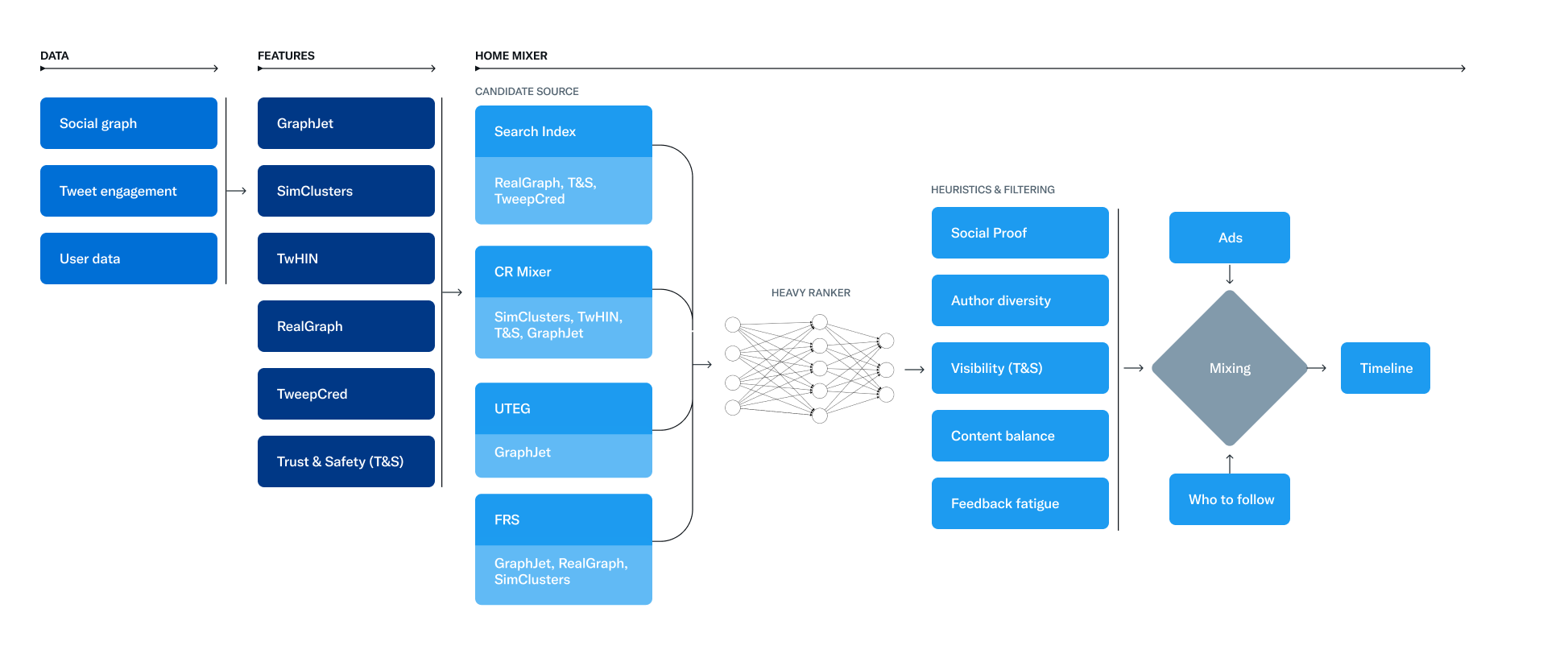

2. In March 2023, Twitter published most parts of its algorithm. Like the Google leak, it lacks “context” between the factors, but it was insightful nonetheless.

Twitter’s algorithm in a system chart. (Image Credit: Kevin Indig)

3. Also in March 2023, Instagram’s chief Adam Mosseri published an in-depth follow-up post on how the platform ranks content in different parts of its product.

Despite the leaks, there are no known cases of a user or brand hacking the platform in a clean, ethical way.

The more a platform rewards engagement in its algorithm, the harder it is to game. And yet, the Google leak is especially interesting because Google Search is an intent-driven platform, where users signal their interest through searches rather than behavior.

As a result, knowing the ingredients for the cake is a big step forward, even without knowing how much of each to use.

I can’t understand why Google has been so secretive about ranking factors all along. I’m not saying it should have published them to the degree of the leak, but it could have incentivized a better web with fast, easy-to-navigate, good-looking, informative sites.

Instead, it left people guessing too much, which led to a lot of poor content, which in turn led to algorithm updates that cost many companies a lot of money.

System Diagram from Github.com

Featured Image: Paulo Bobita/Search Engine Journal
