
This post was sponsored by JetOctopus. The opinions expressed in this article are the sponsor’s own.

If you manage a large website with over 10,000 pages, you can likely appreciate the unique SEO challenges that come with such scale.

Sure, the traditional tools and tactics (keyword optimization, link building, etc.) are important to establish a strong foundation and maintain basic SEO hygiene.

However, they may not fully address the technical complexities of site visibility for search bots and the dynamic needs of a large enterprise website.

This is where log analyzers become crucial. An SEO log analyzer monitors and analyzes server access logs to give you real insights into how search engines interact with your website. It allows you to take strategic action that satisfies both search crawlers and users, leading to stronger returns on your efforts.

In this post, you’ll learn what a log analyzer is and how it can enable your enterprise SEO strategy to achieve sustained success. But first, let’s take a quick look at what makes SEO tricky for big websites with thousands of pages.

The Unique SEO Challenges For Large Websites

Managing SEO for a website with over 10,000 pages isn’t just a step up in scale; it’s a whole different ball game.

Relying on traditional SEO tactics limits your site’s potential for organic growth. You can have the best titles and content on your pages, but if Googlebot can’t crawl them effectively, those pages will be ignored and may never get ranked.

For big websites, the sheer volume of content and pages makes it difficult to ensure every (important) page is optimized for visibility to Googlebot. On top of that, the complexity of an elaborate site architecture often leads to significant crawl budget issues, which means Googlebot misses crucial pages during its crawls.

Image created by JetOctopus, May 2024

Furthermore, big websites are more vulnerable to technical glitches, such as unexpected tweaks in the code from the dev team, that can impact SEO. This often exacerbates other issues like slow page speeds due to heavy content, broken links in bulk, or redundant pages that compete for the same keywords (keyword cannibalization).

All in all, the issues that come with size call for a more robust approach to SEO, one that can adapt to the dynamic nature of big websites and make every optimization effort count toward the ultimate goal of improving visibility and driving traffic.

This strategic shift is where the power of an SEO log analyzer becomes evident, providing granular insights that help prioritize high-impact actions. The primary action is to better understand Googlebot as if it were your website’s main user: until your important pages are accessed by Googlebot, they won’t rank and drive traffic.

What Is An SEO Log Analyzer?

An SEO log analyzer is essentially a tool that processes and analyzes the data generated by web servers every time a page is requested. It tracks how search engine crawlers interact with a website, providing crucial insights into what happens behind the scenes. A log analyzer can identify which pages are crawled, how often, and whether any crawl issues occur, such as Googlebot being unable to access important pages.
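To make this concrete, here is a minimal Python sketch of the core of such a tool, assuming your servers write the common Apache/Nginx "combined" log format. It keeps only requests whose user agent contains "Googlebot" and counts hits per URL and per status code. The regex, function names, and sample lines are illustrative assumptions, not JetOctopus internals. Matching on the user-agent string alone is also a simplification, since any client can send that string, so production tools typically verify Googlebot separately.

```python
import re
from collections import Counter

# Pattern for the Apache/Nginx "combined" log format (an assumption;
# adapt it if your servers use a custom format).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (path, status) for requests whose user agent mentions Googlebot."""
    for line in lines:
        match = COMBINED.match(line)
        if match and "Googlebot" in match.group("agent"):
            yield match.group("path"), int(match.group("status"))


def crawl_summary(lines):
    """Count Googlebot hits per URL path and per HTTP status code."""
    per_path, per_status = Counter(), Counter()
    for path, status in googlebot_hits(lines):
        per_path[path] += 1
        per_status[status] += 1
    return per_path, per_status


# Made-up sample lines: two Googlebot requests and one regular visitor.
BOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LINES = [
    f'66.249.66.1 - - [10/May/2024:06:25:11 +0000] "GET /category/shoes HTTP/1.1" 200 5120 "-" "{BOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:06:25:14 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{BOT_UA}"',
    '203.0.113.7 - - [10/May/2024:06:26:02 +0000] "GET /category/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

per_path, per_status = crawl_summary(SAMPLE_LINES)
print("Hits per URL:", dict(per_path))
print("Hits per status:", dict(per_status))
```

A real analyzer streams files far too large to hold in memory; because `googlebot_hits` is a generator, you can pass it an open file object instead of a list.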

By analyzing these server logs, log analyzers help SEO teams understand how a website is actually seen by search engines. This allows them to make precise adjustments to enhance site performance, boost crawl efficiency, and ultimately improve SERP visibility.

Put simply, a deep dive into the log data helps uncover opportunities and pinpoint issues that might otherwise go unnoticed on large websites.

But why exactly should you focus your efforts on treating Googlebot as your most important visitor?

Why is crawl budget a big deal?

Let’s look into this.

Optimizing Crawl Budget For Maximum SEO Impact

Crawl budget refers to the number of pages a search engine bot, like Googlebot, will crawl on your site within a given timeframe. Once a site’s budget is used up, the bot will stop crawling and move on to other websites.

Crawl budgets vary for every website, and your site’s budget is determined by Google itself, based on a range of factors such as the site’s size, performance, frequency of updates, and links. If you focus on optimizing these factors strategically, you can increase your crawl budget and speed up ranking for new website pages and content.

As you’d expect, making the most of this budget ensures that your most important pages are frequently visited and indexed by Googlebot. This typically translates into better rankings (provided your content and user experience are solid).

And here’s where a log analyzer tool proves particularly useful by providing detailed insights into how crawlers interact with your site. As mentioned earlier, it lets you see which pages are being crawled and how often, helping identify and resolve inefficiencies such as low-value or irrelevant pages that are wasting valuable crawl resources.
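As a rough illustration of spotting crawl budget waste, the sketch below takes (path, status) pairs for Googlebot requests, such as those extracted from your logs, and reports what share went to responses other than 200 (redirects, 404s, and so on) or to URLs carrying low-value query parameters. Both rules are just one possible heuristic, and the `LOW_VALUE_PARAMS` set is a hypothetical example you would replace with your own site’s parameters.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameters that rarely deserve crawl budget on this example site.
LOW_VALUE_PARAMS = {"sort", "sessionid", "utm_source"}


def waste_reason(path, status):
    """Return why a Googlebot hit looks wasteful, or None if it looks fine."""
    if status != 200:
        return f"status {status}"
    params = parse_qs(urlsplit(path).query)
    if not LOW_VALUE_PARAMS.isdisjoint(params):
        return "low-value parameter"
    return None


def crawl_waste_report(hits):
    """Return the share of hits that look wasteful and a count per reason."""
    reasons = Counter()
    for path, status in hits:
        reason = waste_reason(path, status)
        if reason is not None:
            reasons[reason] += 1
    share = sum(reasons.values()) / len(hits) if hits else 0.0
    return share, reasons


# Made-up Googlebot hits as (path, status) pairs.
hits = [
    ("/category/shoes", 200),
    ("/category/shoes?sort=price", 200),
    ("/old-page", 404),
    ("/product/123", 301),
]
share, reasons = crawl_waste_report(hits)
print(f"{share:.0%} of Googlebot hits look wasteful: {dict(reasons)}")
```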

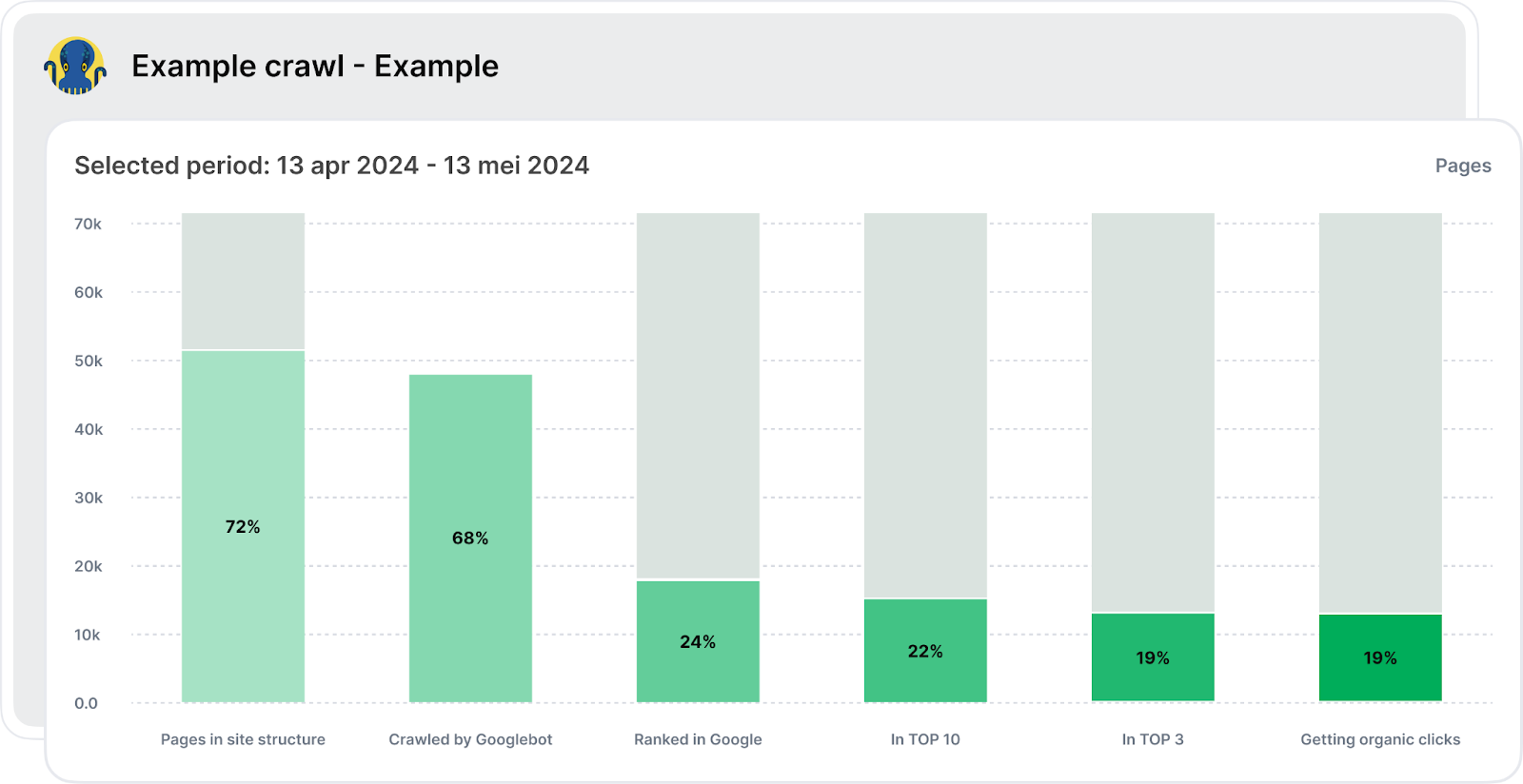

An advanced log analyzer like JetOctopus offers a complete view of all the stages from crawling and indexation to getting organic clicks. Its SEO Funnel covers all the main stages, from your website being visited by Googlebot to being ranked in the top 10 and bringing in organic traffic.

Image created by JetOctopus, May 2024

As you can see above, the tabular view shows how many pages are open to indexation versus those closed from indexation. Understanding this ratio is crucial because if commercially important pages are closed from indexation, they will not appear in subsequent funnel stages.

The next stage examines the number of pages crawled by Googlebot, with “green pages” representing those crawled and within the structure, and “gray pages” indicating potential crawl budget waste because they are visited by Googlebot but are not within the structure, possibly orphan pages or pages accidentally excluded from the structure. Hence, it’s vital to analyze this part of your crawl budget for optimization.
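At its core, the green/gray split is set arithmetic between the URLs Googlebot requested (from the logs) and the URLs your own crawl found in the site structure. This Python sketch assumes you already have both lists; the function name and sample URLs are hypothetical. It also returns the reverse gap: pages in the structure that Googlebot never visited.

```python
def classify_crawled_pages(crawled_urls, structure_urls):
    """Split URLs into green, gray and never-crawled sets.

    green:         crawled by Googlebot and present in the site structure
    gray:          crawled by Googlebot but missing from the structure
                   (possible orphan or accidentally excluded pages)
    never_crawled: in the structure but not seen in the logs
    """
    crawled = set(crawled_urls)
    structure = set(structure_urls)
    green = crawled & structure
    gray = crawled - structure
    never_crawled = structure - crawled
    return green, gray, never_crawled


# Hypothetical inputs: URLs from Googlebot log lines and from a site crawl.
log_urls = ["/", "/category/shoes", "/promo-2019", "/category/shoes"]
crawl_urls = ["/", "/category/shoes", "/category/bags"]

green, gray, never_crawled = classify_crawled_pages(log_urls, crawl_urls)
print("Green:", sorted(green))
print("Gray (potential crawl budget waste):", sorted(gray))
print("In structure, never crawled:", sorted(never_crawled))
```

In practice, both URL lists need the same normalization (scheme, host, trailing slashes) before comparison, or identical pages will land in different buckets.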

The later stages include analyzing what percentage of pages are ranked in Google SERPs, how many of these rankings are in the top 10 or top three, and, finally, the number of pages receiving organic clicks.

Overall, the SEO funnel gives you concrete numbers, with links to lists of URLs for further analysis, such as indexable vs. non-indexable pages and where crawl budget waste is happening. It is an excellent starting point for crawl budget analysis, letting you see the big picture and gather insights for an impactful optimization plan that drives tangible SEO growth.

Put simply, by prioritizing high-value pages, making sure they’re free from errors and easily accessible to search bots, you can greatly improve your site’s visibility and ranking.

Using an SEO log analyzer, you can understand exactly what should be optimized on pages that are being ignored by crawlers, work on them, and thus attract Googlebot visits. A log analyzer also helps optimize other crucial aspects of your website:

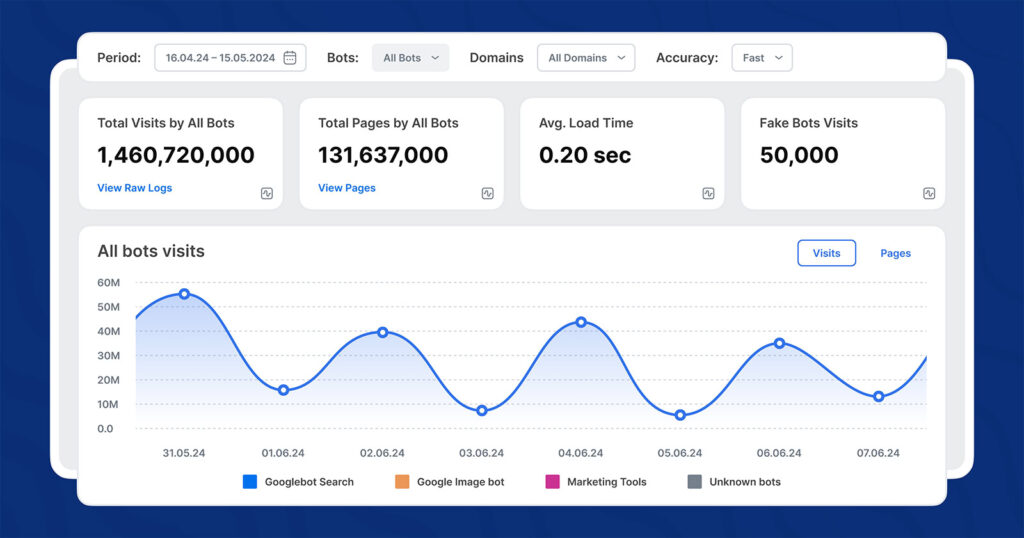

Image created by JetOctopus, May 2024

- Detailed Analysis of Bot Behavior: Log analyzers allow you to dissect how search bots interact with your site by examining factors like the depth of their crawl, the number of internal links on a page, and the word count per page. This detailed analysis gives you concrete to-do items for optimizing your site’s SEO performance.

- Improves Internal Linking and Technical Performance: Log analyzers provide detailed insights into the structure and health of your site. They help identify underperforming pages and optimize internal link placement, ensuring smoother navigation for users and crawlers. They also facilitate the fine-tuning of content to better meet SEO standards, while highlighting technical issues that may affect site speed and accessibility.

- Aids in Troubleshooting JavaScript and Indexation Challenges: Big websites, especially eCommerce sites, often rely heavily on JavaScript for dynamic content. For JS websites, the crawling process takes longer. A log analyzer can track how well search engine bots are able to render and index JavaScript-dependent content, highlighting potential pitfalls in real time. It also identifies pages that are not being indexed as intended, allowing for timely corrections so that all relevant content can rank.

- Helps Optimize Distance from Index (DFI): Distance from Index (DFI) refers to the number of clicks required to reach any given page from the home page. A lower DFI is generally better for SEO, as it means important content is easier to find for both users and search engine crawlers. Log analyzers help map out the navigational structure of your site, suggesting changes that can reduce DFI and improve the overall accessibility of key content and product pages.
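Since DFI as defined above is a shortest-path length in clicks, a breadth-first search over your internal link graph computes it. The sketch below is a minimal version that assumes you have exported the link graph as a dictionary mapping each URL to the URLs it links to (for example, from a site crawl). The sample graph and the three-click threshold are illustrative assumptions, not a recommended limit.

```python
from collections import deque


def distance_from_index(links, home="/"):
    """Breadth-first search: fewest clicks needed to reach each page from home.

    links maps each URL to the URLs it links to. Pages unreachable from
    home are absent from the result (candidate orphan pages).
    """
    dfi = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in dfi:  # first visit in BFS = shortest distance
                dfi[target] = dfi[page] + 1
                queue.append(target)
    return dfi


# Hypothetical link graph exported from a site crawl.
site = {
    "/": ["/category/shoes", "/about"],
    "/category/shoes": ["/category/shoes?page=2", "/product/1"],
    "/category/shoes?page=2": ["/product/2"],
    "/product/2": ["/product/3"],
}
dfi = distance_from_index(site)
deep = sorted(url for url, depth in dfi.items() if depth > 3)  # example threshold
print("DFI per page:", dfi)
print("Pages deeper than three clicks:", deep)
```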

In addition, the historical log data provided by a log analyzer can be invaluable. It makes your SEO performance not only understandable but also predictable. Analyzing past interactions lets you spot trends, anticipate future hiccups, and plan more effective SEO strategies.
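One simple way to use historical log data for catching hiccups early is to compare each day’s Googlebot hit count with a trailing average. This sketch assumes you have already aggregated the logs into daily counts; the seven-day window and the 50% drop threshold are arbitrary example values, not industry standards.

```python
from datetime import date


def flag_crawl_drops(daily_hits, window=7, threshold=0.5):
    """Flag days whose Googlebot hits fall below threshold * trailing average.

    daily_hits: list of (date, hit_count) pairs sorted by date.
    Returns (day, hit_count, trailing_average) for each flagged day.
    """
    flagged = []
    for i in range(window, len(daily_hits)):
        trailing = [count for _, count in daily_hits[i - window:i]]
        average = sum(trailing) / window
        day, count = daily_hits[i]
        if count < threshold * average:
            flagged.append((day, count, average))
    return flagged


# Made-up history: a steady week, one sharp drop, then a recovery.
history = [(date(2024, 5, d), 1000) for d in range(1, 8)]
history += [(date(2024, 5, 8), 300), (date(2024, 5, 9), 950)]

for day, count, average in flag_crawl_drops(history):
    print(f"{day}: {count} Googlebot hits vs. trailing average {average:.0f}")
```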

With JetOctopus, you benefit from no volume limits on logs, enabling comprehensive analysis without the fear of missing crucial data. This is fundamental to continually refining your strategy and securing your site’s top spot in the fast-evolving landscape of search.

Real-World Wins Using A Log Analyzer

Big websites in various industries have leveraged log analyzers to attain and maintain top spots on Google for profitable keywords, which has significantly contributed to their business growth.

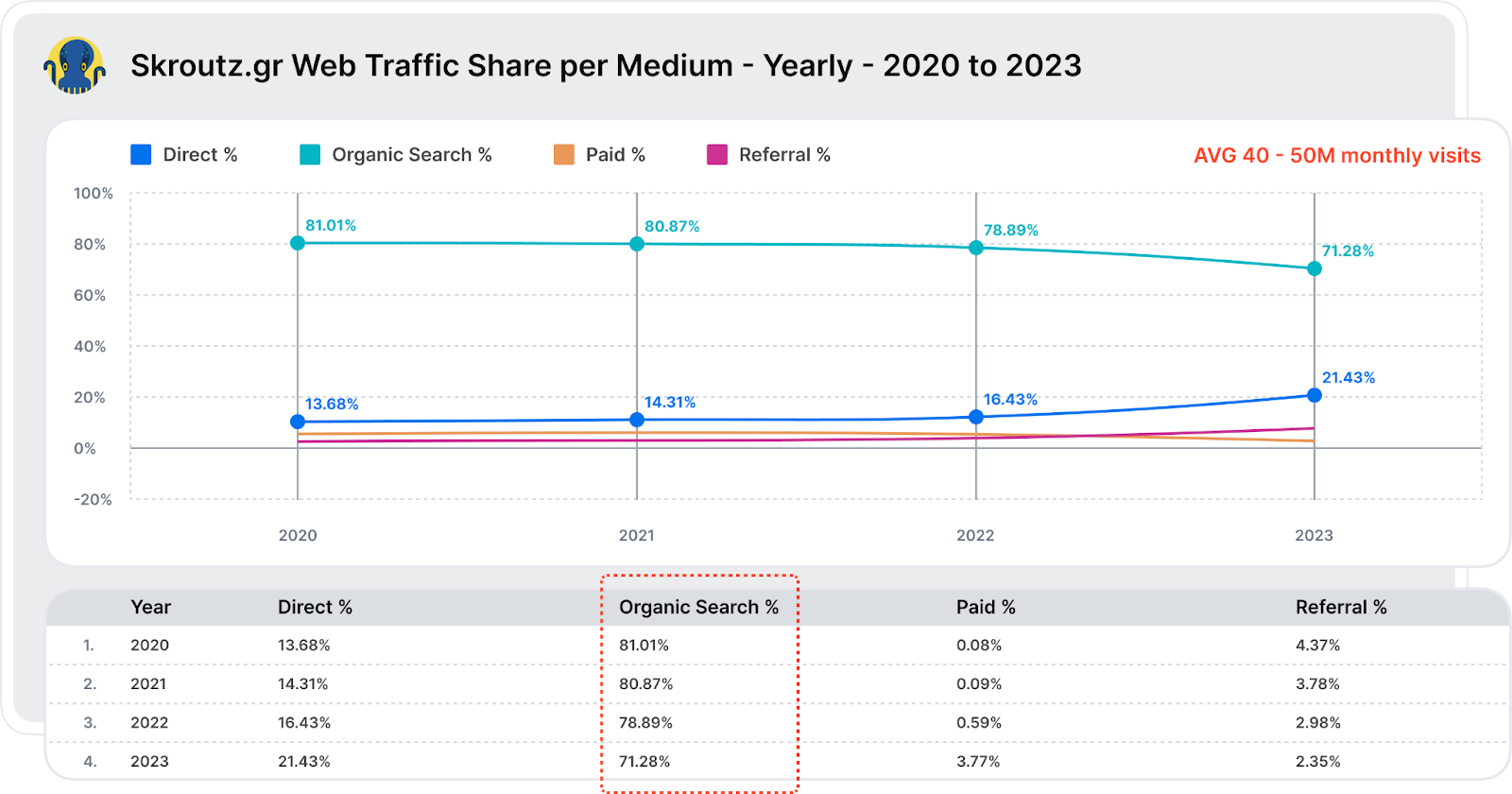

For example, Skroutz, Greece’s biggest marketplace website with over 1 million sessions daily, set up a real-time crawl and log analyzer tool that helped them answer questions like:

- Does Googlebot crawl pages that have more than two filters activated?

- How extensively does Googlebot crawl a particularly popular category?

- What are the main URL parameters that Googlebot crawls?

- Does Googlebot visit pages with filters like “Size,” which are typically marked as nofollow?

The ability to see real-time visualization tables and historical log data spanning over ten months of Googlebot crawls enabled Skroutz to find crawling loopholes and decrease index size, thus optimizing its crawl budget.

Eventually, they also saw a reduction in the time it took for new URLs to be indexed and ranked: instead of 2-3 months, the indexing and ranking phase took only a few days.

This strategic approach to technical SEO using log files has helped Skroutz cement its position as one of the top 1000 websites globally according to SimilarWeb, and the fourth most visited website in Greece (after Google, Facebook, and YouTube), with over 70% of its traffic coming from organic search.

Image created by JetOctopus, May 2024

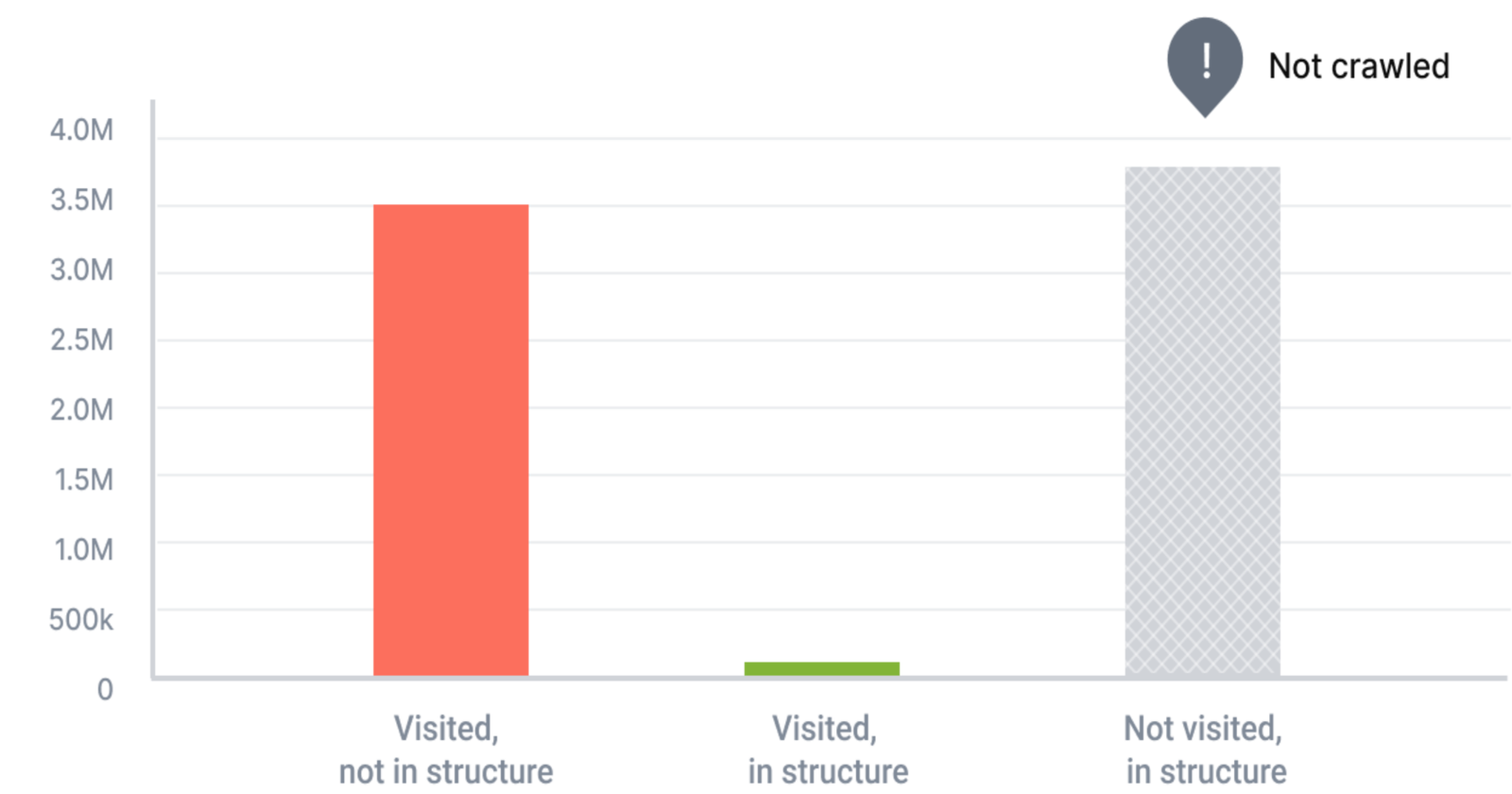

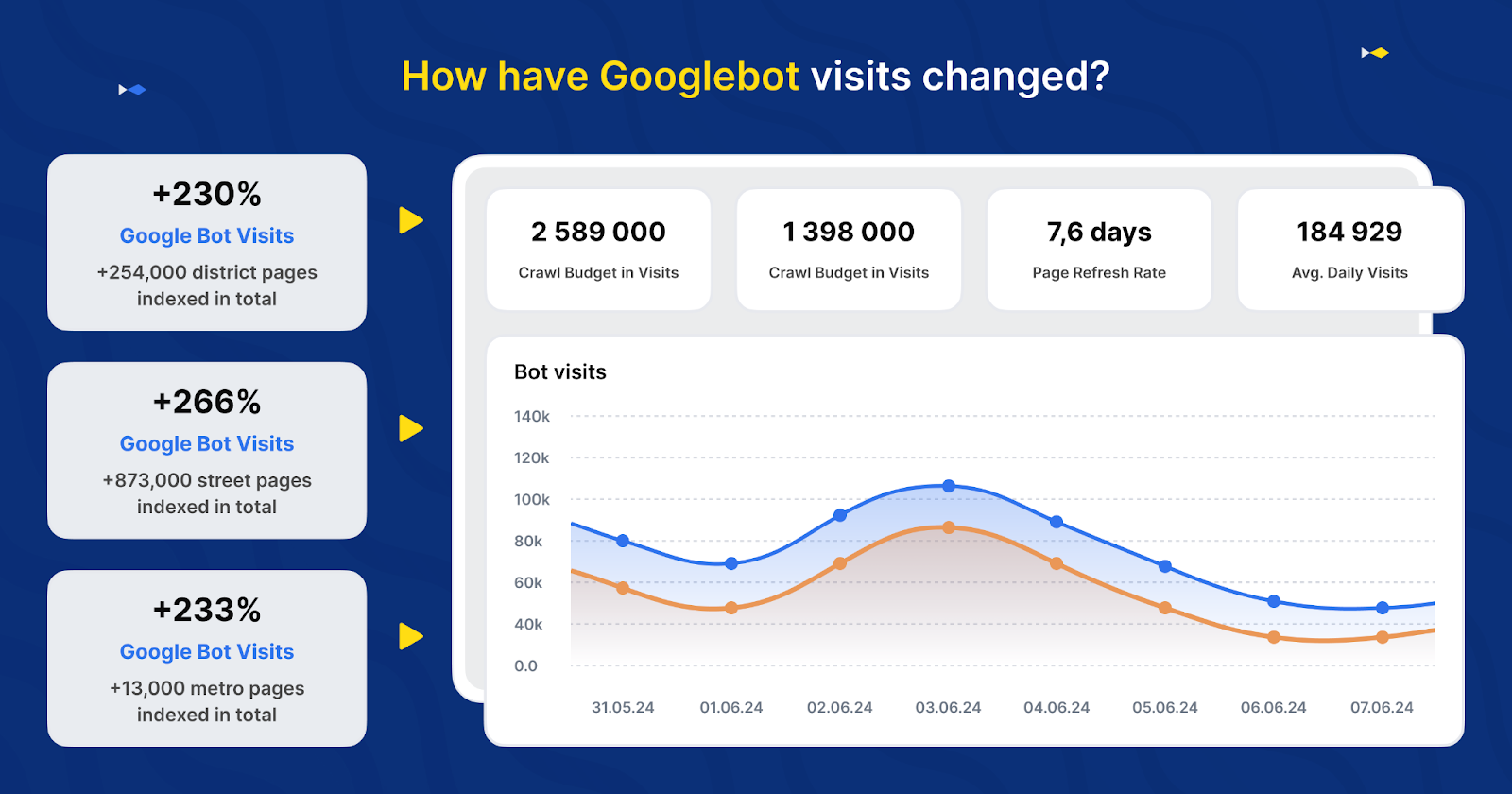

Another case in point is DOM.RIA, Ukraine’s popular real estate and rental listing website, which doubled its Googlebot visits by optimizing its website’s crawl efficiency. Because its site structure is huge and elaborate, the team needed to optimize crawl efficiency for Googlebot to ensure the freshness and relevance of the content appearing in Google.

Initially, they implemented a new sitemap to improve the indexing of deeper directories. Despite these efforts, Googlebot visits remained low.

By using JetOctopus to analyze their log files, DOM.RIA identified and addressed issues with their internal linking and DFI. They then created mini-sitemaps for poorly scanned directories (such as for a city, including URLs for streets, districts, metro, etc.) while assigning meta tags with links to pages that Googlebot often visits. This strategic change resulted in a more than twofold increase in Googlebot activity on these crucial pages within two weeks.
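Here is a minimal sketch of the same idea: count Googlebot hits per directory to find poorly crawled sections, then build a small sitemap listing their URLs in the sitemaps.org XML format. The directory depth, the hit threshold, and the example URLs are hypothetical; this is not DOM.RIA’s actual implementation.

```python
from collections import Counter
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def hits_per_directory(crawled_paths, depth=2):
    """Count Googlebot hits per directory prefix, e.g. /kyiv/metro."""
    counts = Counter()
    for path in crawled_paths:
        segments = [s for s in path.split("?")[0].split("/") if s]
        counts["/" + "/".join(segments[:depth])] += 1
    return counts


def poorly_crawled(directories, counts, min_hits):
    """Return directories with fewer than min_hits Googlebot hits."""
    return [d for d in directories if counts[d] < min_hits]


def build_mini_sitemap(urls):
    """Return a minimal sitemaps.org XML document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Made-up data: paths Googlebot requested and directories from the structure.
crawled = ["/kyiv/streets/a", "/kyiv/streets/b", "/kyiv/streets/a", "/kyiv/metro/x"]
directories = ["/kyiv/streets", "/kyiv/metro", "/kyiv/districts"]

weak = poorly_crawled(directories, hits_per_directory(crawled), min_hits=2)
print("Poorly crawled:", weak)
print(build_mini_sitemap([
    "https://www.example.com/kyiv/metro/x",
    "https://www.example.com/kyiv/metro/y",
]))
```

When writing the result to disk, save it as UTF-8, which the sitemap protocol requires.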

Image created by JetOctopus, May 2024

Getting Started With An SEO Log Analyzer

Now that you know what a log analyzer is and what it can do for big websites, let’s take a quick look at the steps involved in log analysis.

Here is an overview of using an SEO log analyzer like JetOctopus for your website:

- Integrate Your Logs: Begin by integrating your server logs with a log analysis tool. This step is crucial for capturing all data related to site visits, which includes every request made to the server.

- Identify Key Issues: Use the log analyzer to uncover significant issues such as server errors (5xx), slow load times, and other anomalies that could be affecting user experience and site performance. This step involves filtering and sorting through large volumes of data to focus on high-impact problems.

- Fix the Issues: Once problems are identified, prioritize and address them to improve site reliability and performance. This might involve fixing broken links, optimizing slow-loading pages, and correcting server errors.

- Combine with Crawl Analysis: Merge log analysis data with crawl data. This integration allows for a deeper dive into crawl budget analysis and optimization. Analyze how search engines crawl your site and adjust your SEO strategy so that your most valuable pages receive sufficient attention from search bots.
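The “Identify Key Issues” and “Combine with Crawl Analysis” steps can be sketched in a few lines of Python. The example assumes Googlebot hits have already been parsed into (path, status) pairs and that your crawler exported a hypothetical mapping of each URL to an indexable flag. The function names are illustrative, not part of any particular tool.

```python
from collections import Counter


def server_errors(hits):
    """Count Googlebot hits that received a 5xx response, per URL."""
    return Counter(path for path, status in hits if 500 <= status <= 599)


def merge_with_crawl(hits, crawl_pages):
    """Join log hits with crawl data.

    crawl_pages is a hypothetical export mapping URL -> True if indexable.
    Returns indexable pages Googlebot never requested and the number of
    Googlebot hits spent on pages the crawl marked as non-indexable.
    """
    hit_counts = Counter(path for path, _ in hits)
    unvisited = sorted(url for url, indexable in crawl_pages.items()
                       if indexable and hit_counts[url] == 0)
    non_indexable_hits = sum(count for url, count in hit_counts.items()
                             if crawl_pages.get(url) is False)
    return unvisited, non_indexable_hits


# Made-up Googlebot hits (path, status) and crawl export.
hits = [
    ("/checkout", 503),
    ("/checkout", 500),
    ("/category/shoes", 200),
    ("/filter?color=red", 200),
    ("/filter?color=red", 200),
]
crawl_pages = {"/category/shoes": True, "/category/bags": True, "/filter?color=red": False}

print("5xx errors served to Googlebot:", dict(server_errors(hits)))
unvisited, wasted = merge_with_crawl(hits, crawl_pages)
print("Indexable but never crawled:", unvisited)
print("Hits on non-indexable pages:", wasted)
```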

And that’s how you can ensure that search engines are efficiently indexing your most important content.

Conclusion

As you can see, the strategic use of log analyzers is more than just a technical necessity for large-scale websites. Optimizing your site’s crawl efficiency with a log analyzer can have an immense impact on your SERP visibility.

For CMOs managing large-scale websites, embracing a log analyzer and crawler toolkit like JetOctopus is like getting an extra technical SEO analyst who bridges the gap between SEO data integration and organic traffic growth.

Image Credits

Featured Image: Image by JetOctopus. Used with permission.
