
Migrating a large website is always daunting. Significant traffic is at stake amid many moving parts, technical challenges and stakeholder management.

Historically, one of the most onerous tasks in a migration plan has been redirect mapping: the painstaking process of matching URLs on your current site to the equivalent version on the new website.

Fortunately, this task, which previously could involve teams of people combing through thousands of URLs, can be drastically sped up with modern AI models.

Should you use AI for redirect mapping?

The term "AI" has become somewhat conflated with "ChatGPT" over the last year, so to be very clear from the outset, we're not talking about using generative AI/LLM-based systems to do your redirect mapping.

While there are some tasks that tools like ChatGPT can assist you with, such as writing that tricky regex for the redirect logic, the generative element that can cause hallucinations could potentially create accuracy issues for us.

Advantages of using AI for redirect mapping

Speed

The primary advantage of using AI for redirect mapping is the sheer speed at which it can be done. An initial map of 10,000 URLs could be produced within a few minutes and human-reviewed within a few hours. Doing this process manually would usually take a single person days of work.

Scalability

Using AI to help map redirects is a method you can use on a site with 100 URLs or over 1,000,000. Large sites also tend to be more programmatic or templated, making similarity matching more accurate with these tools.

Efficiency

For larger sites, a multi-person job can easily be handled by a single person with the right knowledge, freeing up colleagues to assist with other parts of the migration.

Accuracy

While the automated method will get some redirects "wrong," in my experience, the overall accuracy of redirects has been higher, as the output can specify the similarity of the match, giving manual reviewers a guide on where their attention is most needed.

Disadvantages of using AI for redirect mapping

Over-reliance

Using automation tools can make people complacent and over-reliant on the output. With such an important task, a human review is always required.

Training

The script is pre-written and the process is straightforward. However, it will be new to many people, and environments such as Google Colab can be intimidating.

Output variance

While the output is deterministic, the models will perform better on some sites than others. Sometimes the output can contain "silly" errors, which are obvious for a human to spot but harder for a machine.

A step-by-step guide to URL mapping with AI

By the end of this process, we're aiming to produce a spreadsheet that lists "from" and "to" URLs by mapping the origin URLs on our live website to the destination URLs on our staging (new) website.

For this example, to keep things simple, we'll just be mapping our HTML pages, not additional assets such as CSS or images, although this is also possible.

Tools we'll be using

- Screaming Frog Website Crawler: A powerful and flexible website crawler, Screaming Frog is how we collect the URLs and associated metadata we need for the matching.

- Google Colab: A free cloud service that uses a Jupyter notebook environment, allowing you to run a range of languages directly from your browser without having to install anything locally. Google Colab is how we're going to run our Python scripts to perform the URL matching.

- Automated Redirect Matchmaker for Site Migrations: The Python script by Daniel Emery that we'll be running in Colab.

Step 1: Crawl your live website with Screaming Frog

You'll need to perform a standard crawl of your website. Depending on how your website is built, this may or may not require a JavaScript crawl. The goal is to produce a list of as many accessible pages on your site as possible.

Step 2: Export HTML pages with 200 Status Code

Once the crawl has been completed, we want to export all of the HTML URLs found with a 200 Status Code.

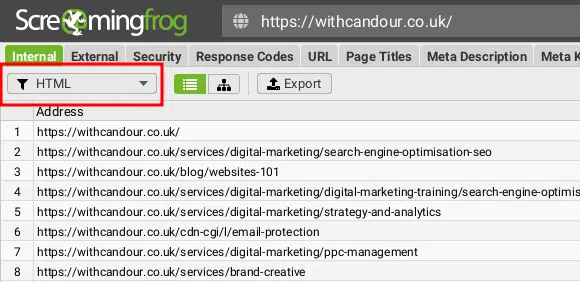

Firstly, within the high left-hand nook, we have to choose “HTML” from the drop-down menu.

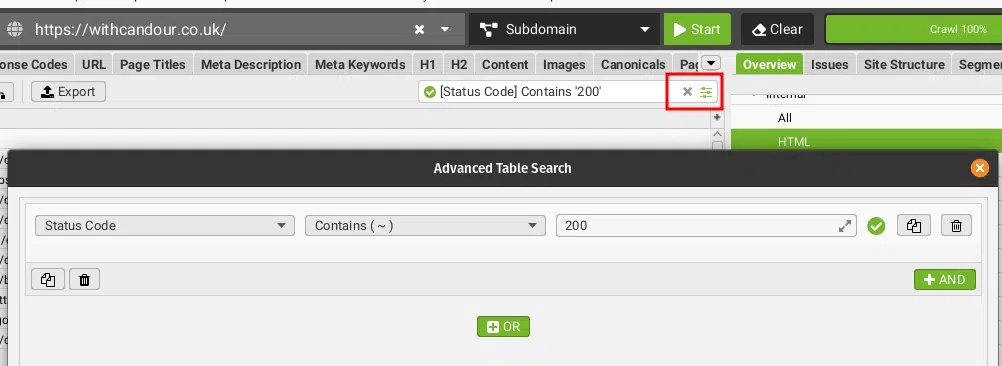

Subsequent, click on the sliders filter icon within the high proper and create a filter for Standing Codes containing 200.

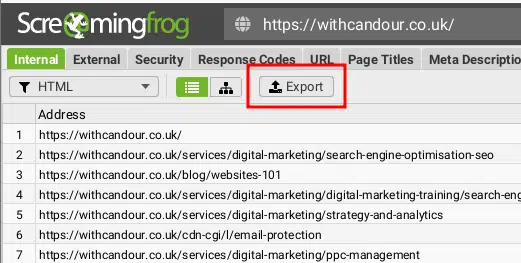

Lastly, click on on Export to save lots of this knowledge as a CSV.

This can give you an inventory of our present dwell URLs and all the default metadata Screaming Frog collects about them, equivalent to Titles and Header Tags. Save this file as origin.csv.
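If you want to sanity-check origin.csv before uploading it, or you exported the whole Internal tab rather than a filtered view, a few lines of Python can apply the same HTML-and-200 filter. This is a minimal sketch using only the standard library; it assumes the export uses Screaming Frog's usual `Address`, `Content Type` and `Status Code` headers, so adjust the names if your export differs.

```python
import csv
import io

# Assumed Screaming Frog column headers; check them against your own export.
ADDRESS = "Address"
CONTENT_TYPE = "Content Type"
STATUS_CODE = "Status Code"


def html_200_rows(csv_text):
    """Return the rows that are HTML pages answering with a 200 status code."""
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = []
    for row in reader:
        # Missing cells come back as None, so fall back to an empty string.
        is_html = (row.get(CONTENT_TYPE) or "").lower().startswith("text/html")
        is_ok = (row.get(STATUS_CODE) or "").strip() == "200"
        if is_html and is_ok:
            kept.append(row)
    return kept


# Made-up sample rows for illustration only.
sample = (
    "Address,Content Type,Status Code,Title 1\n"
    "https://example.com/,text/html; charset=UTF-8,200,Home\n"
    "https://example.com/old,text/html; charset=UTF-8,301,\n"
    "https://example.com/style.css,text/css,200,\n"
)
pages = html_200_rows(sample)
print([row[ADDRESS] for row in pages])  # ['https://example.com/']
```

To run it on your own file, read it with `open("origin.csv", encoding="utf-8-sig").read()`; the `-sig` codec simply ignores a byte-order mark if one is present.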

Important note: Your full migration plan needs to account for things such as existing 301 redirects and URLs that may get traffic on your site but aren't accessible from an initial crawl. This guide is intended only to demonstrate part of the URL mapping process; it isn't an exhaustive guide.

Step 3: Repeat steps 1 and 2 for your staging website

We now need to gather the same data from our staging website, so we have something to compare against.

Depending on how your staging site is secured, you may need to use features such as Screaming Frog's forms authentication if it's password protected.

Once the crawl has completed, export the data and save this file as destination.csv.

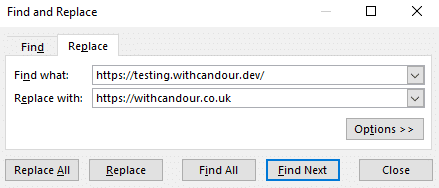

Optional: Find and replace your staging site domain or subdomain to match your live site

It's likely your staging website is on a different subdomain, TLD or even domain that won't match your actual destination URL. For this reason, I use a Find and Replace function on my destination.csv to change the URLs to match the final live site subdomain, domain or TLD.

For instance:

- My live website is https://withcandour.co.uk/ (origin.csv)
- My staging website is https://testing.withcandour.dev/ (destination.csv)
- The site is staying on the same domain; it's just a redesign with different URLs, so I would open destination.csv, find any instance of https://testing.withcandour.dev and replace it with https://withcandour.co.uk.

This also means that when the redirect map is produced, the output is correct and only the final redirect logic needs to be written.
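If you'd rather script the find-and-replace than do it in a spreadsheet, the sketch below rewrites only the start of each URL, so a staging hostname that happens to appear in a title or heading is left alone. It is a minimal sketch using the example domains above; the `Address` column name is an assumption about the Screaming Frog export, and unlike a spreadsheet find-and-replace it touches only that one column.

```python
import csv
import io

# Origins from the example above; swap in your own staging and live origins.
STAGING_ORIGIN = "https://testing.withcandour.dev"
LIVE_ORIGIN = "https://withcandour.co.uk"


def to_live_url(url, staging=STAGING_ORIGIN, live=LIVE_ORIGIN):
    """Rewrite a staging URL onto the live origin; other URLs are returned unchanged."""
    # Require an exact origin or a following "/" so lookalike hosts aren't matched.
    if url == staging or url.startswith(staging + "/"):
        return live + url[len(staging):]
    return url


def rewrite_addresses(csv_text, column="Address"):
    """Apply to_live_url to one column of a CSV export and return the new CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        row[column] = to_live_url(row[column])
        writer.writerow(row)
    return out.getvalue()


print(to_live_url("https://testing.withcandour.dev/about-us/"))
# https://withcandour.co.uk/about-us/
```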

Step 4: Run the Google Colab Python script

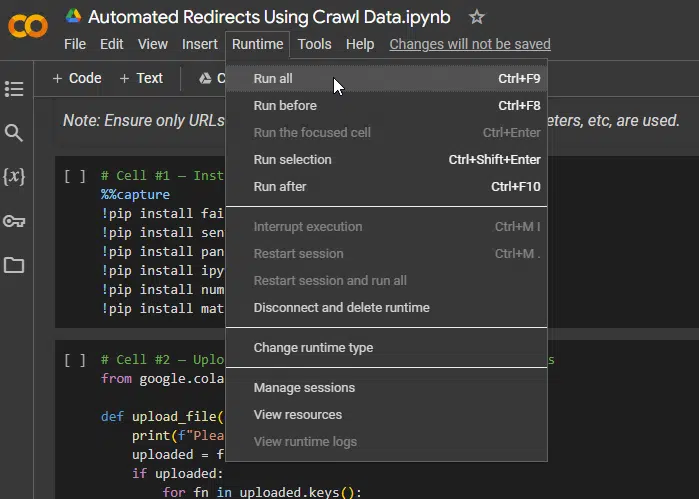

When you navigate to the script in your browser, you will see it's broken up into several code blocks, and hovering over each one will show a "play" icon. This is for when you want to execute one block of code at a time.

However, the script will work perfectly well by just executing all of the code blocks, which you can do by going to the Runtime menu and selecting Run all.

There are no prerequisites to run the script; it will create a cloud environment, and on the first execution in your instance, it will take around one minute to install the required modules.

Each code block will have a small green tick next to it once it's complete, but the third code block will require your input to continue, and it's easy to miss, as you'll likely need to scroll down to see the prompt.


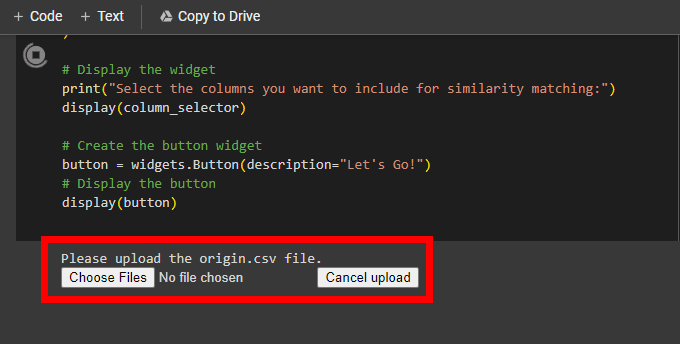

Step 5: Upload origin.csv and destination.csv

When prompted, click Choose files and navigate to where you saved your origin.csv file. Once you have selected this file, it will upload, and you will be prompted to do the same for your destination.csv.

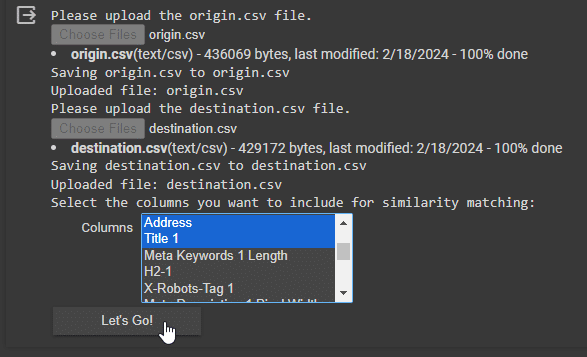

Step 6: Select fields to use for similarity matching

What makes this script particularly powerful is the ability to use multiple sets of metadata for your comparison.

This means that if you're in a situation where you're changing site architecture and your URLs (the Address field) won't be comparable, you can run the similarity algorithm on other elements under your control, such as Page Titles or Headings.

Look at both sites and try to pick the elements you think remain fairly consistent between them. Generally, I'd advise starting simple and adding more fields if you're not getting the results you want.

In my example, we have kept a similar, though not identical, URL naming convention, and our page titles remain consistent because we're copying the content over.

Select the elements you want to use and click Let's Go!
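How the script combines several selected fields isn't covered here, so treat the following as one plausible approach rather than its actual code: join the chosen columns into a single string per page and embed that string. `build_match_text` is a hypothetical helper, and the `Address`, `Title 1` and `H1-1` headers are assumptions about the Screaming Frog export.

```python
def build_match_text(row, fields):
    """Join the chosen fields of one crawl row into a single string to embed.

    Empty or missing fields are skipped so they don't add noise.
    """
    parts = []
    for field in fields:
        value = (row.get(field) or "").strip()
        if value:
            parts.append(value)
    return " | ".join(parts)


# A made-up crawl row for illustration.
row = {
    "Address": "https://example.com/blog/redirect-mapping/",
    "Title 1": "Redirect mapping with AI",
    "H1-1": "",
}
print(build_match_text(row, ["Address", "Title 1", "H1-1"]))
# https://example.com/blog/redirect-mapping/ | Redirect mapping with AI
```

Starting with one or two fields and adding more only when results are poor, as suggested above, also keeps each string focused on the elements that actually stay consistent between sites.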

Step 7: Watch the magic

The script's main components are all-MiniLM-L6-v2 and FAISS, but what are they and what are they doing?

all-MiniLM-L6-v2 is a small, efficient model built on Microsoft's MiniLM family of models, which are designed for natural language processing (NLP) tasks. MiniLM converts the text data we've given it into numerical vectors that capture its meaning.
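To make "vectors that capture meaning" concrete: each page's text becomes a list of numbers (all-MiniLM-L6-v2 produces 384 of them per text), and two pages can be compared by the angle between their vectors. With the sentence-transformers library, the encoding step is roughly `SentenceTransformer("all-MiniLM-L6-v2").encode(texts)`. The sketch below leaves that step out and uses tiny made-up vectors so it runs without downloading a model. Cosine similarity is a standard way to compare such embeddings, but this is an illustration, not a claim about exactly how the script computes its score.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings"; real all-MiniLM-L6-v2 vectors have 384 values.
about_live = [0.9, 0.1, 0.2]
about_staging = [0.9, 0.1, 0.2]
contact_staging = [0.1, 0.8, 0.3]

print(round(cosine_similarity(about_live, about_staging), 3))  # 1.0
print(round(cosine_similarity(about_live, contact_staging), 3))
```

Identical vectors score 1.0, which matches the idea that a similarity score of 1 suggests an exact match; unrelated pages score much lower.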

These vectors then enable the similarity search, performed by Facebook AI Similarity Search (FAISS), a library developed by Facebook AI Research for efficient similarity search and clustering of dense vectors. It quickly finds the most similar content pairs across the dataset.
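The search itself can be pictured as: for every origin page, find the destination page whose vector scores highest. FAISS does this efficiently at scale; for example, a `faiss.IndexFlatIP(d)` index, filled with `add()` and queried with `search(queries, 1)`, performs an exact inner-product search, which equals cosine similarity once vectors are normalised to unit length. The brute-force version below is a plain-Python stand-in for that idea, not the script's code. It assumes one best match per origin URL, mirroring the single matched_url column in the output, and the URLs and vectors are made up.

```python
import math


def normalise(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    length = math.sqrt(sum(x * x for x in vec))
    if length == 0:
        raise ValueError("cannot normalise a zero vector")
    return [x / length for x in vec]


def best_matches(origin_vecs, dest_vecs):
    """For each origin vector, return (index of best destination, similarity score).

    This is an exact brute-force search comparing every origin with every
    destination; FAISS's flat indexes give the same ranking much faster.
    """
    if not dest_vecs:
        raise ValueError("need at least one destination vector")
    dests = [normalise(v) for v in dest_vecs]
    results = []
    for vec in origin_vecs:
        o = normalise(vec)
        scores = [sum(a * b for a, b in zip(o, d)) for d in dests]
        best = max(range(len(scores)), key=scores.__getitem__)
        results.append((best, scores[best]))
    return results


origin_urls = ["https://example.com/about", "https://example.com/contact"]
dest_urls = ["https://example.com/contact-us", "https://example.com/about-us"]
origin_vecs = [[0.9, 0.1, 0.2], [0.1, 0.8, 0.3]]
dest_vecs = [[0.1, 0.7, 0.3], [0.8, 0.1, 0.2]]

for url, (idx, score) in zip(origin_urls, best_matches(origin_vecs, dest_vecs)):
    print(url, "->", dest_urls[idx], round(score, 3))
```

Brute force compares every pair, which is fine for a few thousand pages but grows quickly; that cost is what a dedicated library like FAISS is built to handle.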

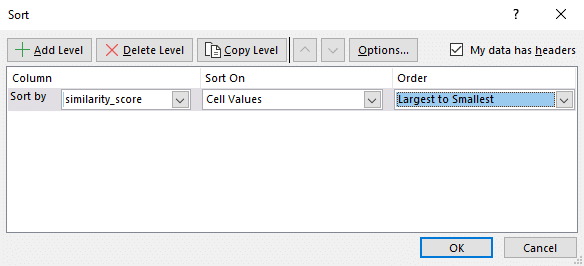

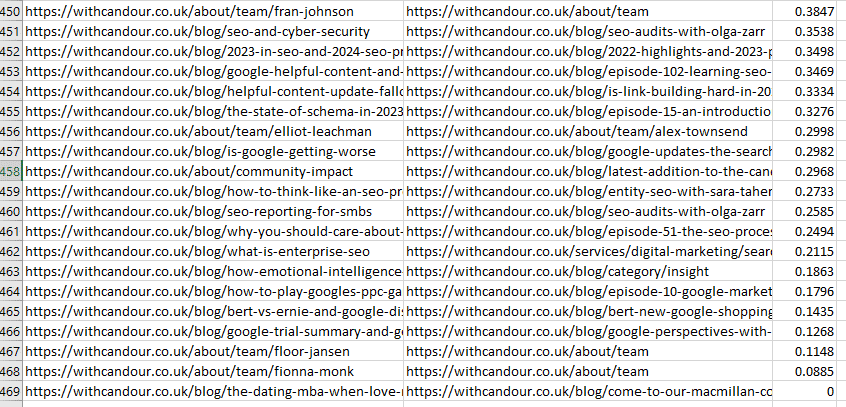

Step 8: Download output.csv and sort by similarity_score

The output.csv should download automatically in your browser. If you open it, you should see three columns: origin_url, matched_url and similarity_score.

In your favourite spreadsheet software, I'd recommend sorting by similarity_score.

The similarity score gives you an idea of how good the match is. A similarity score of 1 suggests an exact match.

Checking my output file, I immediately saw that roughly 95% of my URLs had a similarity score above 0.98, so there's a good chance I've saved myself a lot of time.

Step 9: Human-validate your results

Pay special attention to the lowest similarity scores in your sheet; this is likely where no good matches could be found.

In my example, there were some poor matches on the team page, which led me to discover that not all of the team profiles had yet been created on the staging site. That was a really helpful find.

The script has also, quite helpfully, given us redirect recommendations for old blog content we decided to axe and not include on the new website. We now have a suggested redirect should we want to pass that traffic to something related; that's ultimately your call.

Step 10: Tweak and repeat

If you didn't get the results you wanted, I'd double-check that the fields you're using for matching stay as consistent as possible between sites. If not, try a different field or group of fields and rerun.
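When tweaking fields and rerunning, it can help to summarise each output.csv the same way so runs are easy to compare: the share of rows above a cut-off and the lowest-scoring rows to review first. This is a minimal sketch that assumes the output's origin_url, matched_url and similarity_score columns. The 0.98 default only echoes the figure from the example above and is not a recommended threshold, and the sample rows are made up.

```python
import csv
import io


def summarise_matches(csv_text, threshold=0.98, n_lowest=5):
    """Summarise a redirect-matching output file.

    Returns the share of rows scoring at or above `threshold`, plus the
    `n_lowest` rows sorted by ascending similarity_score (the ones most
    worth a human review).
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return 0.0, []
    for row in rows:
        row["similarity_score"] = float(row["similarity_score"])
    above = sum(1 for row in rows if row["similarity_score"] >= threshold)
    lowest = sorted(rows, key=lambda row: row["similarity_score"])[:n_lowest]
    return above / len(rows), lowest


# Made-up sample rows for illustration only.
sample = (
    "origin_url,matched_url,similarity_score\n"
    "https://example.com/about,https://example.com/about-us,0.995\n"
    "https://example.com/team/jo,https://example.com/team,0.61\n"
    "https://example.com/contact,https://example.com/contact-us,0.987\n"
    "https://example.com/old-post,https://example.com/blog,0.42\n"
)
share, lowest = summarise_matches(sample, n_lowest=2)
print(f"{share:.0%} of rows at or above 0.98")  # 50% of rows at or above 0.98
for row in lowest:
    print(row["similarity_score"], row["origin_url"], "->", row["matched_url"])
```

For a real run, pass in `open("output.csv", encoding="utf-8").read()`, then compare the share and the lowest rows before and after changing your matching fields.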

More AI to come

Generally, I've been slow to adopt any AI (especially generative AI) in the redirect mapping process, as the cost of errors can be high and AI errors can sometimes be difficult to spot.

However, in my testing, I've found these specific AI models to be robust for this particular task, and they have fundamentally changed how I approach site migrations.

Human checking and oversight are still required, but the time saved on the bulk of the work means you can carry out a more thorough and considered human review and finish the task many hours ahead of where you'd usually be.

In the not-too-distant future, I expect we'll see more specialised models that let us take additional steps, including improving the speed and efficiency of the next stage: the redirect logic.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.
